The Risks of Over-Reliance on Generative AI in Product Development

Generative AI is revolutionizing product development, but are we becoming too reliant on it? From "shiny object syndrome" to the "black box" problem, overdependence can lead to over-engineered features, biased outputs, and undifferentiated products.

Hey there, tech leaders, founders, and AI enthusiasts! 👋

We're diving deep into the risks of reaching for generative AI as the answer to every product problem.

While these tools offer incredible potential, it's crucial to keep their limitations in mind.

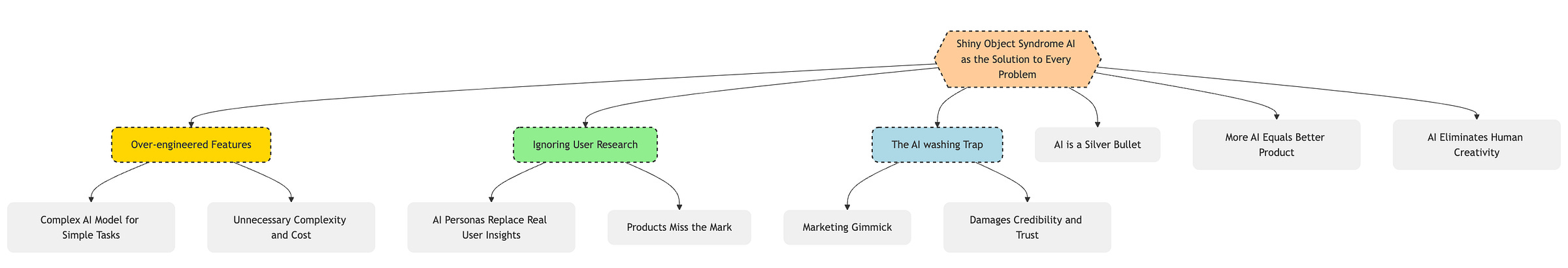

👉 Shiny Object Syndrome: When AI Becomes the Solution to Every Problem

It's easy to get caught up in the hype surrounding generative AI.

It seems like there's a new model or tool launching every day, promising to revolutionize how we build products.

But this can lead teams to shoehorn AI into every aspect of development, even when it's not the best solution.

➡️ Specific Examples:

Over-engineered features: Imagine a team using a complex AI model to generate product descriptions when a simple template-based approach would suffice. This adds unnecessary complexity and cost without significant user benefit.

Ignoring user research: Relying solely on AI to generate user personas or predict user needs can lead to products that miss the mark. AI should augment, not replace, real user insights.

The "AI-washing" trap: Slapping an AI label on a product just for marketing purposes, even if the underlying technology is rudimentary, can damage credibility and user trust.
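To make the over-engineered features example concrete, here is a minimal Python sketch of the template-based alternative for product descriptions. The product fields and the `describe` helper are hypothetical, chosen only for illustration; the point is that a fixed template is deterministic, cheap to run, and easy to review.

```python
from string import Template

# Hypothetical template; the field names are illustrative, not from a real catalog.
DESCRIPTION_TEMPLATE = Template(
    "$name is a $color $category made from $material. "
    "Available in sizes $sizes."
)


def describe(product: dict) -> str:
    """Fill the fixed template from a product record (deterministic and reviewable)."""
    return DESCRIPTION_TEMPLATE.substitute(
        name=product["name"],
        color=product["color"],
        category=product["category"],
        material=product["material"],
        sizes=", ".join(product["sizes"]),
    )


if __name__ == "__main__":
    shirt = {
        "name": "Harbor Tee",
        "color": "navy",
        "category": "T-shirt",
        "material": "organic cotton",
        "sizes": ["S", "M", "L"],
    }
    print(describe(shirt))
```

For the sample record this prints "Harbor Tee is a navy T-shirt made from organic cotton. Available in sizes S, M, L." A missing field raises a `KeyError` instead of producing plausible-sounding filler, which makes data gaps visible.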

➡️ Common Misconceptions:

AI is a silver bullet: Many believe that AI can solve any problem, but it's crucial to remember that AI is a tool, not a panacea.

More AI equals better product: There's a misconception that adding more AI features automatically makes a product superior. In reality, it's about using AI strategically to enhance user experience.

AI eliminates the need for human creativity: Some fear that AI will replace human creativity, but in product development, AI is best used as a tool to augment and amplify human ingenuity, not replace it.

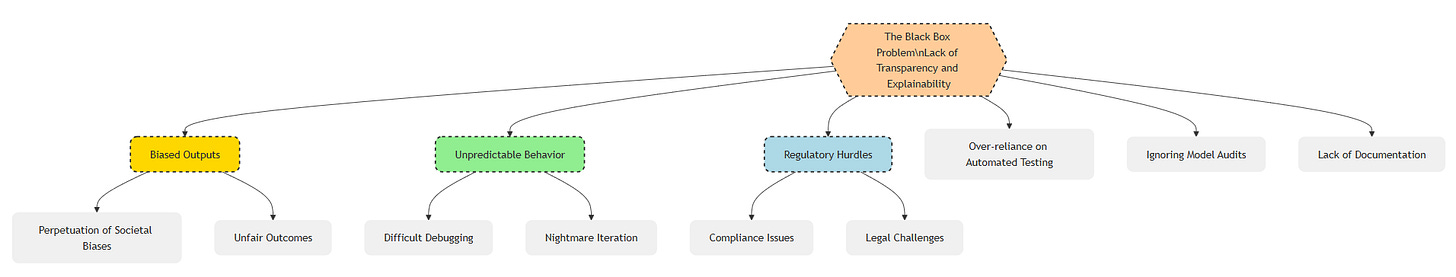

👉 The Black Box Problem: Lack of Transparency and Explainability

Generative AI models, especially deep learning models, are often referred to as "black boxes." This means their decision-making processes are opaque, making it difficult to understand why a model produced a particular output. In product development, this lack of transparency can have serious consequences.

➡️ Specific Examples:

Biased outputs: AI models are trained on data, and if that data reflects existing societal biases, the model will likely perpetuate them. For example, a recruitment tool trained on biased data might unfairly favor certain demographics.

Unpredictable behavior: When a model produces an unexpected output, it can be difficult to diagnose the root cause without understanding its internal workings. This can make debugging and iteration a nightmare.

Regulatory issues: In industries like finance and healthcare, regulators are increasingly scrutinizing AI systems. Lack of explainability can lead to compliance issues and legal challenges.
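One lightweight way to screen the recruitment example for biased outputs is to compare selection rates across groups. The sketch below applies the "four-fifths" rule of thumb from US employee-selection guidance, which flags any group whose selection rate is below 80% of the highest group's rate. The function names and the synthetic decisions are assumptions for illustration, and a ratio check like this is a screening signal rather than a complete fairness audit.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, was_selected) pairs -> selection rate per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(decisions):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    return {group: (rate / best if best > 0 else 1.0) for group, rate in rates.items()}


def flag_adverse_impact(decisions, threshold=0.8):
    """Groups whose impact ratio falls below the four-fifths (0.8) rule of thumb."""
    return sorted(g for g, ratio in impact_ratios(decisions).items() if ratio < threshold)


if __name__ == "__main__":
    # Synthetic screening decisions: 50/100 selected in group A, 30/100 in group B.
    decisions = [("A", i < 50) for i in range(100)] + [("B", i < 30) for i in range(100)]
    print(impact_ratios(decisions))
    print(flag_adverse_impact(decisions))
```

With this made-up data, group B's impact ratio is 0.3 / 0.5 = 0.6, so it is flagged for closer review.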

➡️ Things to Watch Out For:

Over-reliance on automated testing: While automated testing is crucial, it may not catch subtle biases or edge cases that require human judgment.

Ignoring model audits: Regular audits of AI models are essential to identify and mitigate biases, but they can be challenging when dealing with black box systems.

Lack of documentation: Poorly documented models make it even harder to understand their behavior and limitations.

👉 The Creativity Bottleneck

While generative AI can be a powerful tool for brainstorming and ideation, over-reliance on it can lead to a homogenization of ideas and a stifling of true innovation. When teams rely too heavily on AI-generated concepts, they risk converging on similar solutions, leading to a lack of differentiation in the market.

➡️ Specific Examples:

Echo chamber effect: If everyone is using the same AI tools to generate ideas, they're likely to end up with similar outputs, leading to a lack of diversity in product concepts.

Loss of human touch: AI may struggle to capture the nuances of human emotion and cultural context, leading to products that feel sterile or out of touch.

Dependence on existing data: Generative AI models are trained on existing data, which means they're inherently backward-looking. This can make it difficult to break new ground and develop truly innovative solutions.
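The echo chamber effect can be monitored, at least roughly. This minimal sketch scores a batch of ideas by the average pairwise Jaccard similarity of their word sets, where a higher score suggests a more homogeneous batch. Word overlap is an assumed and deliberately crude proxy (a team might swap in embedding similarity), and the example idea lists are invented.

```python
import re
from itertools import combinations


def tokens(text: str) -> set[str]:
    """Lowercased word set: a deliberately crude lexical proxy for an idea's content."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Shared words divided by total distinct words across both ideas."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def mean_pairwise_similarity(ideas: list[str]) -> float:
    """Average Jaccard similarity over all idea pairs; higher means more homogeneous."""
    sets = [tokens(idea) for idea in ideas]
    pairs = list(combinations(sets, 2))
    if not pairs:
        return 0.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    # Invented idea batches for illustration.
    similar = [
        "AI chatbot for customer support",
        "AI chatbot for customer onboarding",
        "AI chatbot for customer billing",
    ]
    varied = [
        "Offline-first field inspection app",
        "Voice notes that auto-tag receipts",
        "Shared family calendar with chore swaps",
    ]
    print(round(mean_pairwise_similarity(similar), 2))
    print(round(mean_pairwise_similarity(varied), 2))
```

Each pair of chatbot variants shares four of six distinct words, so that batch scores about 0.67, while the varied batch shares no words and scores 0.0.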

➡️ Don't Make These Mistakes:

Using AI as a crutch for ideation: AI should be used to augment, not replace, human brainstorming sessions.

Ignoring professional intuition: Sometimes, the best ideas come from intuition and experience, not from an algorithm.

Failing to iterate beyond AI outputs: AI-generated concepts should be treated as starting points, not final products.

👉 Looking Forward: Navigating the Future of AI in Product Development

The key to successfully integrating generative AI into product development lies in striking a balance between leveraging its capabilities and avoiding over-reliance. As we look ahead, here are some crucial considerations:

➡️ Predictions:

Rise of hybrid intelligence: The future will likely involve a collaborative approach where AI and humans work together, each leveraging their unique strengths. This "hybrid intelligence" model will combine the computational power of AI with human creativity, intuition, and ethical judgment.

Focus on explainable AI (XAI): We can expect to see increased research and development in the field of XAI, aiming to make AI decision-making more transparent and understandable. This will be crucial for building trust and ensuring accountability.

Emphasis on human-centered design: As AI becomes more prevalent, the importance of human-centered design principles will only grow. Products will need to be designed with a deep understanding of user needs, values, and ethical considerations.

New roles and skill sets: The rise of AI will create new roles and require new skill sets in product development. Professionals will need to understand how to effectively collaborate with AI, interpret its outputs, and mitigate its risks.

The future of product development is not about AI versus humans but about how we can work together to create innovative, ethical, and impactful products that truly benefit users!

❓ Questions Deep Dive:

1️⃣ If generative AI models are often "black boxes," how can product teams effectively debug or troubleshoot unexpected outputs without understanding the internal decision-making process?

Run rigorous A/B tests that compare model, prompt, or configuration variants across diverse input sets to identify patterns and triggers of unexpected behavior.

Develop robust "anomaly detection" systems that flag outputs deviating significantly from established baselines.

Focus on "input-output" analysis, treating the model as a system whose internal workings are unknown but whose responses to specific inputs can be systematically mapped.
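Anomaly detection against a baseline can start very simply. The sketch below records a metric over known-good outputs, then flags new outputs whose z-score exceeds a threshold. Using word count as the metric and 3.0 as the threshold are assumptions for illustration; a team would track whichever metrics matter for its product.

```python
from statistics import mean, stdev


def output_metric(text: str) -> float:
    """Hypothetical metric: response length in words. Swap in what matters for you."""
    return float(len(text.split()))


def build_baseline(values: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of a metric over known-good outputs."""
    return float(mean(values)), stdev(values)


def is_anomalous(value: float, baseline: tuple[float, float], z_threshold: float = 3.0) -> bool:
    """Flag values more than z_threshold standard deviations from the baseline mean."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu  # no spread observed, so any change is unusual
    return abs(value - mu) / sigma > z_threshold


if __name__ == "__main__":
    # Invented word counts from responses a team has already reviewed as good.
    baseline = build_baseline([48, 52, 50, 47, 53, 51, 49, 50])
    for response_length in (51.0, 120.0):
        print(response_length, is_anomalous(response_length, baseline))
```

With this sample baseline (mean 50 words, standard deviation 2), a 120-word response is flagged while a 51-word response passes. Because the check only looks at inputs and outputs, it also fits the "input-output" approach: the model's internals are never inspected.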

2️⃣ How can companies balance the benefits of rapid AI-driven ideation with the risk of creating homogenous, undifferentiated products?

Implement "human-in-the-loop" ideation processes where AI-generated concepts are critically evaluated, refined, and combined with human insights.

Encourage the use of multiple, diverse AI tools to generate a wider range of initial ideas, reducing the "echo chamber" effect.

Establish clear guidelines that prioritize originality and user-centricity over simply adopting the first AI-generated suggestion.

3️⃣ With the rise of Explainable AI (XAI), what specific techniques or tools are emerging to help product teams better understand the decision-making processes of generative models?

Techniques like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) are gaining traction, providing insights into feature importance and model behavior.

Visualization tools are being developed to help teams understand how models process information and arrive at specific outputs.

Platforms that allow for interactive exploration of model behavior, enabling users to probe "what-if" scenarios, are becoming increasingly available.
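SHAP is built on Shapley values from cooperative game theory. To show what those attributions mean, here is a from-scratch Python sketch that computes exact Shapley values for a tiny model by enumerating every feature coalition. It does not use the `shap` library; replacing absent features with a baseline value is one common convention, assumed here, and the toy model is hypothetical. The number of coalitions grows exponentially with the number of features, which is why practical tools approximate these values.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], float]


def shapley_values(model: Model, x: Sequence[float], baseline: Sequence[float]) -> list[float]:
    """Exact Shapley attribution for each feature of input x.

    phi_i = sum over coalitions S not containing i of
            |S|! (n - |S| - 1)! / n! * (v(S + {i}) - v(S)),
    where v(S) runs the model with features in S taken from x and all
    other features replaced by their baseline value (an assumed convention).
    """
    n = len(x)

    def value(coalition: frozenset) -> float:
        mixed = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(mixed)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(len(others) + 1):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def toy_model(f: Sequence[float]) -> float:
    """Hypothetical model: two linear terms plus one interaction term."""
    return 2 * f[0] + 3 * f[1] + f[0] * f[2]


if __name__ == "__main__":
    print(shapley_values(toy_model, x=[1.0, 1.0, 1.0], baseline=[0.0, 0.0, 0.0]))
```

For f(x) = 2*x0 + 3*x1 + x0*x2 at x = [1, 1, 1] with a zero baseline, the attributions come out to [2.5, 3.0, 0.5] up to floating-point rounding: each linear term goes to its own feature, the interaction term is split evenly between x0 and x2, and the values sum to f(x) - f(baseline) = 6.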

4️⃣ How might over-reliance on AI-generated ideas impact the skill sets and professional development of product designers and developers in the long run?

There's a risk that over-dependence on AI could lead to a decline in fundamental design and development skills.

Professionals may need to shift towards higher-level strategic thinking, focusing on curating, evaluating, and refining AI-generated outputs rather than solely creating from scratch.

Skills in prompt engineering and AI model selection will become increasingly valuable.

5️⃣ In industries with stringent regulations (e.g., finance, healthcare), how can companies leverage generative AI for product development while ensuring compliance and mitigating the risks associated with "black box" models?

Companies might need to adopt hybrid approaches, using AI for less sensitive tasks while relying on more transparent methods for critical decision-making.

Investing in XAI techniques becomes paramount, enabling teams to provide justifications for AI-driven outputs to regulators.

"Human-in-the-loop" systems, where AI recommendations are reviewed and approved by human experts, can provide a crucial layer of oversight.

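The hybrid approach and human-in-the-loop oversight described above can be combined into a simple review gate. In this minimal sketch, low-risk AI suggestions are approved automatically, high-risk ones wait for a named human reviewer, and every decision is written to an audit log that could support conversations with regulators. The class names, the two-level risk scale, and the example tasks are all hypothetical; a real system would also need persistence, access control, and a risk classification agreed with compliance teams.

```python
from dataclasses import dataclass, field
from enum import Enum


class Risk(Enum):
    LOW = "low"
    HIGH = "high"


@dataclass
class Suggestion:
    task: str
    output: str
    risk: Risk


@dataclass
class ReviewGate:
    """Hypothetical gate: low-risk AI output ships, high-risk output waits for a human."""
    approved: list[Suggestion] = field(default_factory=list)
    pending: list[Suggestion] = field(default_factory=list)
    audit_log: list[str] = field(default_factory=list)

    def submit(self, s: Suggestion) -> None:
        if s.risk is Risk.LOW:
            self.approved.append(s)
            self.audit_log.append(f"auto-approved: {s.task}")
        else:
            self.pending.append(s)
            self.audit_log.append(f"queued for review: {s.task}")

    def review(self, s: Suggestion, reviewer: str, accept: bool) -> None:
        """Record a human decision on a pending suggestion."""
        self.pending.remove(s)
        if accept:
            self.approved.append(s)
        self.audit_log.append(f"{'approved' if accept else 'rejected'} by {reviewer}: {s.task}")


if __name__ == "__main__":
    gate = ReviewGate()
    copy_draft = Suggestion("marketing copy", "Meet the new savings app.", Risk.LOW)
    credit = Suggestion("credit limit recommendation", "Raise limit to 5000", Risk.HIGH)
    gate.submit(copy_draft)
    gate.submit(credit)
    gate.review(credit, reviewer="analyst_1", accept=False)
    for entry in gate.audit_log:
        print(entry)
```

In this example, the marketing copy ships immediately, while the credit recommendation is held and then rejected by the reviewer, leaving three entries in the audit log.
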
6️⃣ Considering the "black box" nature of many AI models, what ethical frameworks or guidelines can product teams adopt to ensure responsible development and deployment, particularly regarding bias and transparency?

Teams can adopt frameworks like the "Ethics Canvas" to systematically evaluate the ethical implications of their AI projects.

Guidelines should prioritize fairness, accountability, and transparency, even when dealing with complex models.

Building diverse and inclusive development teams can help mitigate biases by ensuring a wider range of perspectives is considered in the design and implementation process.

7️⃣ How might the increasing use of generative AI in product development reshape the competitive landscape, particularly for startups versus established tech giants?

Startups might leverage AI to level the playing field, rapidly prototyping and iterating to compete with larger, slower-moving incumbents.

Established companies could face challenges adapting their legacy processes and mindsets to fully embrace AI-driven development.

The ability to effectively integrate and manage a diverse ecosystem of specialized AI tools could become a key differentiator, potentially leading to a more fragmented and dynamic market.