The Benefits and Challenges of Deploying AI Models on Cloud Platforms

Deploying AI models on the cloud offers scalability and cost-effectiveness that are hard to beat, but data security, vendor lock-in, and latency issues can trip you up!

Hey AI enthusiasts! 👋

Let's dive into the world of deploying AI models on the cloud.

This is a hot topic for anyone working with AI, as it significantly impacts how we build, scale, and maintain our applications.

Moving AI deployments to the cloud offers some incredible benefits, but it also comes with its own set of challenges. And costs (oh 😬).

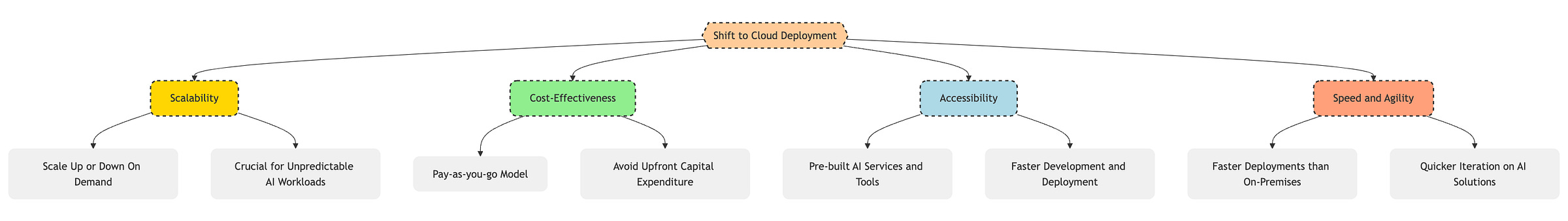

Cloud vs. On-Premises: Why the Shift?

Traditionally, many companies deployed their AI models on their own hardware, also known as on-premises deployment. This gave them full control over their infrastructure and data. However, as AI models became more complex and data-hungry, the limitations of on-premises setups became apparent: keeping up meant costly upfront investments in new hardware.

➡️ The Allure of the Cloud:

Scalability: This is probably the biggest driver. Cloud platforms allow you to scale your resources up or down on demand. Need more processing power during peak times? No problem. Want to scale down during off-hours to save costs? Easy. This flexibility is crucial for AI workloads, which can be unpredictable.
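To make "scale up or down on demand" concrete, here is a minimal sketch of a target-tracking scaling rule. It uses the proportional formula documented for Kubernetes' Horizontal Pod Autoscaler (desired = ceil(current × observed / target)) but leaves out that autoscaler's tolerance band and stabilization windows. The function name, the 60% target, and the replica limits are illustrative assumptions, not a specific provider's API.

```python
import math


def desired_replicas(current_replicas: int,
                     current_utilization_pct: float,
                     target_utilization_pct: float,
                     min_replicas: int = 1,
                     max_replicas: int = 20) -> int:
    """Target tracking: resize the fleet in proportion to how far observed
    utilization is from the target, then clamp to the allowed range."""
    if target_utilization_pct <= 0:
        raise ValueError("target_utilization_pct must be positive")
    raw = math.ceil(current_replicas * current_utilization_pct / target_utilization_pct)
    return max(min_replicas, min(max_replicas, raw))


# Peak hours: 4 replicas running hot at 90% utilization against a 60% target
print(desired_replicas(4, 90, 60))   # 6
# Off-hours: 6 replicas mostly idle at 10% utilization
print(desired_replicas(6, 10, 60))   # 1
```

The clamp matters in practice: the minimum keeps the service available at night, and the maximum caps how much a traffic spike (or a bug) can cost you.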

Cost-Effectiveness: With the cloud, you only pay for what you use. This can be significantly cheaper than investing in and maintaining your own expensive hardware, especially for smaller companies or startups. Plus, you avoid the upfront capital expenditure of buying servers and other equipment.
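Whether pay-as-you-go actually beats buying hardware depends on how many hours you really use. The sketch below does the break-even arithmetic; every price in it (a $30,000 server, $500/month to run it, $3.00 per cloud GPU-hour) is a hypothetical placeholder, so plug in your own quotes.

```python
from typing import Optional

HOURS_PER_MONTH = 730  # common billing approximation: 8,760 hours / 12 months


def months_to_break_even(upfront_hardware_cost: float,
                         onprem_monthly_opex: float,
                         cloud_hourly_rate: float,
                         hours_used_per_month: float) -> Optional[float]:
    """Months until owning hardware becomes cheaper than renting in the cloud.
    Returns None if the cloud stays cheaper at this usage level."""
    cloud_monthly_cost = cloud_hourly_rate * hours_used_per_month
    monthly_savings = cloud_monthly_cost - onprem_monthly_opex
    if monthly_savings <= 0:
        return None  # renting costs less per month than just running your own box
    return upfront_hardware_cost / monthly_savings


# Hypothetical prices: light, bursty usage vs. a GPU busy around the clock
print(months_to_break_even(30_000, 500, 3.00, 100))              # None
print(f"{months_to_break_even(30_000, 500, 3.00, HOURS_PER_MONTH):.1f}")  # 17.8
```

With light usage the cloud never loses; with round-the-clock usage, owning pays off after about a year and a half under these made-up numbers. That is why the cloud tends to favor smaller teams and spiky workloads.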

Accessibility: Cloud platforms offer a wide range of pre-built AI services and tools, like managed machine learning platforms, pre-trained models, and APIs. This makes it easier and faster to develop and deploy AI applications, even if you don't have a large team of AI experts.

Speed and Agility: Cloud deployments are typically faster to set up than on-premises ones. You can spin up new instances in minutes, experiment with different models and configurations quickly, and iterate on your AI solutions at a much faster pace.

➡️ Don't Make These Mistakes:

Ignoring the learning curve: While cloud platforms offer many benefits, they also come with a learning curve. Your team will need to learn how to use the specific cloud platform you choose, its services, and its tools.

Overspending on unused resources: The pay-as-you-go model can be a double-edged sword. If you're not careful, you can easily overspend on resources you're not using. It's important to monitor your usage and optimize your resource allocation to avoid unnecessary costs.
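Monitoring usage can start as simply as flagging instances whose average utilization stays below a threshold. This is a minimal sketch over hypothetical data: the instance names, prices, and the 5% threshold are assumptions, and in a real setup the utilization numbers would come from your provider's monitoring service.

```python
from dataclasses import dataclass
from typing import List, Tuple

HOURS_PER_MONTH = 730  # common billing approximation


@dataclass
class InstanceUsage:
    name: str
    hourly_cost: float
    avg_utilization_pct: float  # e.g. GPU utilization averaged over the past week


def find_idle_instances(instances: List[InstanceUsage],
                        threshold_pct: float = 5.0) -> Tuple[List[str], float]:
    """Return the names of near-idle instances and what they cost per month."""
    idle = [inst for inst in instances if inst.avg_utilization_pct < threshold_pct]
    monthly_waste = sum(inst.hourly_cost for inst in idle) * HOURS_PER_MONTH
    return [inst.name for inst in idle], monthly_waste


# Hypothetical fleet: a forgotten training box, a busy API, an idle notebook
fleet = [
    InstanceUsage("gpu-train-01", 2.50, 1.2),
    InstanceUsage("api-inference", 0.40, 55.0),
    InstanceUsage("notebook-dev", 0.20, 0.5),
]
names, waste = find_idle_instances(fleet)
print(names)                    # ['gpu-train-01', 'notebook-dev']
print(f"${waste:,.2f}/month")   # $1,971.00/month
```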

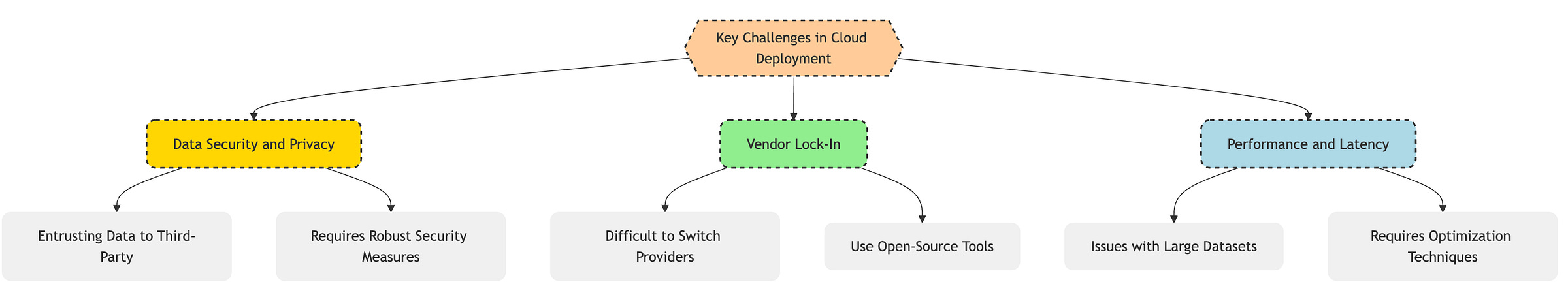

Key Challenges

While the cloud offers many advantages, it's not without its challenges. Deploying AI models on the cloud requires careful planning and consideration. Here are some of the issues you might face:

➡️ Data Security and Privacy:

The Concern: When you move your data to the cloud, you're essentially entrusting it to a third-party provider. This raises concerns about data security and privacy, especially when dealing with sensitive data.

Example: A fintech company deploying a fraud detection model on the cloud needs to ensure that its customer data is protected from unauthorized access and breaches.

Solutions: Cloud providers offer various security features, such as encryption, access controls, and compliance certifications. It's crucial to choose a reputable provider with a strong security track record and to implement robust security measures on your end as well.

Common Misconception: Some believe that on-premises deployments are inherently more secure than cloud deployments. However, this is not always the case. Cloud providers often have more resources and expertise to invest in security than individual companies.
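For the "robust security measures on your end" part, one option (an illustrative choice, not the only or a mandated one) is to pseudonymize identifiers before data leaves your environment. The sketch uses Python's standard `hmac` module: the same customer ID always maps to the same token, so a fraud model can still link transactions, but without the key nobody can recompute tokens to match known IDs. The record fields and the key value are hypothetical.

```python
import hashlib
import hmac


def pseudonymize(customer_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 token (HMAC-SHA256).
    Deterministic for a given key, so joins and per-customer features still work."""
    return hmac.new(secret_key, customer_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key: in practice load it from a secrets manager you control,
# and never ship it to the environment that receives the pseudonymized data
SECRET_KEY = b"replace-with-a-long-random-key"

record = {"customer_id": "C-1029384", "amount": 250.0, "merchant_category": "electronics"}
cloud_record = {**record, "customer_id": pseudonymize(record["customer_id"], SECRET_KEY)}
print(cloud_record["customer_id"][:16], "...")
```

This complements, rather than replaces, the provider's encryption and access controls.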

➡️ Vendor Lock-In:

The Risk: Once you commit to a specific cloud provider, it can be difficult and costly to switch to another provider later on. This is known as vendor lock-in.

Example: If you build your AI application using a specific cloud provider's proprietary services and tools, it may be challenging to migrate your application to another platform without significant rework.

Mitigation Strategies: Consider using open-source tools and technologies that are cloud-agnostic. This will give you more flexibility to move your applications between different cloud providers or even back to on-premises if needed. You can also adopt a multi-cloud strategy, using services from multiple cloud providers to reduce your reliance on a single vendor.

Common Mistake: Many companies underestimate the effort and cost involved in migrating from one cloud provider to another. It's important to factor this into your decision-making process from the outset.
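A common way to keep the door open is to make your application depend on a small interface you own, with one adapter per backend. The sketch below is a minimal illustration: `ModelStore`, `LocalFileStore`, `InMemoryStore`, and `publish_model` are hypothetical names, and a cloud backend would be another class wrapping a provider's object-storage SDK behind the same two methods (not shown).

```python
import tempfile
from pathlib import Path
from typing import Dict, Protocol


class ModelStore(Protocol):
    """The only storage interface the application code depends on."""
    def save(self, name: str, data: bytes) -> None: ...
    def load(self, name: str) -> bytes: ...


class LocalFileStore:
    """On-premises (or local dev) backend: plain files under a root directory."""
    def __init__(self, root: Path) -> None:
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def save(self, name: str, data: bytes) -> None:
        (self.root / name).write_bytes(data)

    def load(self, name: str) -> bytes:
        return (self.root / name).read_bytes()


class InMemoryStore:
    """Test backend; a provider-specific backend would follow the same shape."""
    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}

    def save(self, name: str, data: bytes) -> None:
        self._blobs[name] = data

    def load(self, name: str) -> bytes:
        return self._blobs[name]


def publish_model(store: ModelStore, name: str, weights: bytes) -> bytes:
    """Application code: unchanged no matter which backend is plugged in."""
    store.save(name, weights)
    return store.load(name)


with tempfile.TemporaryDirectory() as tmp:
    for store in (LocalFileStore(Path(tmp)), InMemoryStore()):
        print(type(store).__name__, publish_model(store, "fraud-model-v1.bin", b"\x00\x01"))
```

Migrating then means writing one new adapter instead of touching every call site, which is exactly the effort that tends to be underestimated.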

➡️ Performance and Latency:

The Issue: Cloud deployments can sometimes suffer from performance issues and latency, especially when dealing with large datasets or complex models. This can be a problem for real-time AI applications that require low latency responses.

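Example: An autonomous driving system needs to process data from multiple sensors and make decisions in real time. If those decisions depended on a round trip to the cloud, any network delay could have serious consequences, which is why such systems make their driving decisions on the vehicle itself.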

Optimization Techniques: Cloud providers offer various tools and services to optimize performance, such as content delivery networks (CDNs), caching, and load balancing. It's also important to choose the right instance types and configurations for your specific workloads.
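Caching is the easiest of these to try. The sketch below memoizes inference results in-process with Python's `functools.lru_cache`, so repeated identical requests skip the model entirely. `run_model` is a hypothetical stand-in for an expensive model call, and the cache size is an arbitrary assumption.

```python
from functools import lru_cache

model_calls = 0  # counts how often the "expensive" model actually runs


def run_model(features: tuple) -> float:
    """Hypothetical stand-in for a slow model invocation."""
    global model_calls
    model_calls += 1
    return sum(features) / len(features)


@lru_cache(maxsize=1024)
def cached_predict(features: tuple) -> float:
    # Inputs must be hashable (hence a tuple, not a list) to be cache keys
    return run_model(features)


print(cached_predict((1.0, 2.0, 3.0)))  # 2.0, computed by the model
print(cached_predict((1.0, 2.0, 3.0)))  # 2.0, served from the cache
print(model_calls)                      # 1
print(cached_predict.cache_info())
```

This only pays off when identical inputs recur, and the cache lives inside one process; a fleet of instances behind a load balancer would need a shared cache to get the same effect.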

Watch Out For: Network latency can be a major bottleneck for cloud deployments. Choose a cloud region that is geographically close to your users to minimize it.
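"Geographically close" is a good heuristic, but measured latency from where your users actually are is a better one. This sketch picks the region with the lowest median round-trip time; the region names and millisecond values are hypothetical, and the median is used so a single slow probe doesn't skew the choice.

```python
import statistics
from typing import Dict, List


def pick_region(latency_samples_ms: Dict[str, List[float]]) -> str:
    """Return the region with the lowest median measured latency."""
    medians = {region: statistics.median(samples)
               for region, samples in latency_samples_ms.items() if samples}
    if not medians:
        raise ValueError("no latency samples")
    return min(medians, key=medians.get)


# Hypothetical probes from your users' location to candidate regions
samples = {
    "region-eu": [38.0, 41.0, 250.0, 40.0],   # one slow outlier
    "region-us": [95.0, 92.0, 99.0],
    "region-asia": [180.0, 175.0, 190.0],
}
print(pick_region(samples))  # region-eu
```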

Looking Forward

The trend towards cloud-based AI deployments is only going to accelerate. As AI models become more complex and data-intensive, the scalability, cost-effectiveness, and accessibility of the cloud will become even more critical.

➡️ Predictions:

Hybrid Cloud Adoption: We'll likely see more companies adopting a hybrid cloud approach, combining the benefits of both on-premises and cloud deployments. This will allow them to keep sensitive data on-premises while leveraging the scalability and flexibility of the cloud for other workloads.
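In a hybrid setup, something has to decide which workloads stay on-premises. A minimal sketch of that routing decision, assuming a hypothetical list of fields your compliance team classifies as sensitive:

```python
# Hypothetical classification: fields that must never leave your own infrastructure
SENSITIVE_FIELDS = frozenset({"account_number", "national_id", "date_of_birth"})


def route(record: dict) -> str:
    """Send records containing any sensitive field on-premises, the rest to the cloud."""
    return "on_prem" if not SENSITIVE_FIELDS.isdisjoint(record) else "cloud"


print(route({"amount": 120.0, "merchant_category": "grocery"}))    # cloud
print(route({"account_number": "12345678", "amount": 120.0}))      # on_prem
```

Real deployments usually classify data upstream (per table or per pipeline) rather than per record, but the principle is the same.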

Serverless AI: Serverless computing is gaining traction in the AI space. This approach lets you run your AI models without managing any servers, further reducing operational overhead and, for bursty or low-volume workloads, costs.
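Here is roughly what that looks like: a minimal function-as-a-service handler in the `handler(event, context)` style that AWS Lambda uses for Python. The model, its weights, and the `{"features": [...]}` event shape are hypothetical. The key design choice is loading the model at module level, so it is loaded once per cold start and reused by warm invocations instead of on every request.

```python
import json


def load_model():
    """Hypothetical stand-in for loading real weights (e.g. from object storage)."""
    weights = [0.5, 0.25]

    def predict(features):
        return sum(w * x for w, x in zip(weights, features))

    return predict


# Runs once per cold start; warm invocations reuse the already-loaded model
MODEL = load_model()


def handler(event, context):
    features = event.get("features")
    if not isinstance(features, list) or len(features) != 2:
        return {"statusCode": 400,
                "body": json.dumps({"error": "expected 'features': [x1, x2]"})}
    return {"statusCode": 200, "body": json.dumps({"score": MODEL(features)})}


# Local smoke test; the platform would supply a real context object
print(handler({"features": [2.0, 4.0]}, None))
```

Cold starts are the usual trade-off: the first request after a period of inactivity pays the model-loading time.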

Edge AI: As the Internet of Things (IoT) continues to grow, we'll see more AI processing happening at the edge, closer to the data source. This will reduce latency and bandwidth requirements, enabling real-time AI applications in areas like autonomous driving, smart homes, and industrial automation.
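One way edge processing cuts bandwidth is by deciding locally what is worth sending upstream. This sketch forwards a sensor reading to the cloud only when it deviates from a rolling average of recent readings; the window size, threshold, and temperature values are hypothetical, and a production system would likely use a trained model rather than a fixed threshold.

```python
import statistics
from collections import deque


class EdgeFilter:
    """Keep normal readings on the device; flag outliers for upload."""

    def __init__(self, window: int = 5, threshold: float = 3.0) -> None:
        self.recent = deque(maxlen=window)
        self.threshold = threshold

    def should_upload(self, reading: float) -> bool:
        upload = False
        if len(self.recent) == self.recent.maxlen:  # wait until the baseline is full
            baseline = statistics.fmean(self.recent)
            upload = abs(reading - baseline) > self.threshold
        self.recent.append(reading)
        return upload


# Hypothetical temperature stream with one spike
readings = [20.0, 20.5, 19.5, 20.0, 20.0, 20.2, 35.0, 20.1]
edge = EdgeFilter()
uploaded = [r for r in readings if edge.should_upload(r)]
print(uploaded)  # [35.0]
```

Here one of eight readings leaves the device; the rest never touch the network.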

Enhanced Security Measures: Cloud providers will continue to invest in security features and compliance certifications to address the growing concerns around data security and privacy in the cloud.

AI-Specific Cloud Services: We can expect to see more cloud services specifically designed for AI workloads, such as specialized hardware, optimized machine learning platforms, and pre-trained models for specific industries and use cases.

The cloud is revolutionizing the way we develop and deploy AI. Keep exploring, keep learning, and stay ahead in this rapidly evolving field.