Stop Making These 7 Mistakes in AI Security

Are you unknowingly building vulnerabilities into your AI systems? Here are 7 common security mistakes you might be making and how to fix them.

Hey there, AI product builders! 👋

You're crafting the future, but are you also unknowingly building in vulnerabilities?

In the rush to innovate and deploy, it's easy to overlook some crucial aspects of AI security.

Let's be real: a data breach or a compromised model can set you back big time, damaging your reputation and user trust.

Today, I'm talking directly to data scientists and machine learning engineers.

You're the backbone of AI development, and security needs to be baked into your workflow from day one.

Let's dive into seven common security mistakes you might be making and, more importantly, how to fix them.

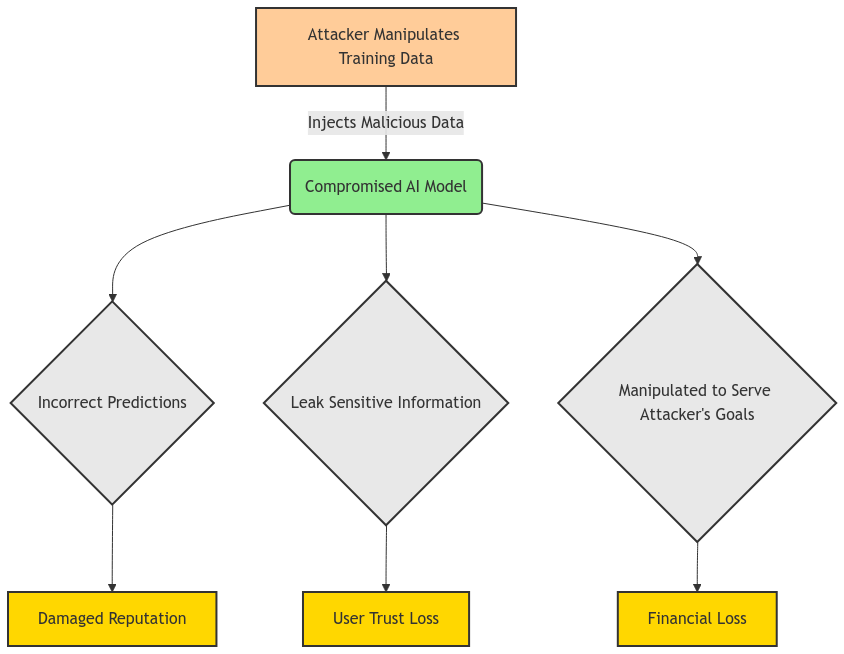

Ignoring Data Poisoning: Your AI's Achilles' Heel

What it is: Data poisoning is when attackers tamper with your training data to steer your model's behavior. Think of it like slipping a few lines of bad code into your system: it might not be noticeable at first, but the consequences can be severe.

Why it's a big deal: A poisoned model can make wrong predictions, leak sensitive information, or even be manipulated to serve an attacker's goals.

The Fix:

Data Sanitization is Key: Implement rigorous data validation checks before training. This includes looking for outliers, inconsistent or duplicated records, and sudden shifts in feature or label distributions.

Source Vetting: Only use data from trusted sources. If you're using third-party datasets, make sure they come from reputable providers with strong security practices.

Monitor Model Behavior: Regularly test your model's performance on a clean dataset to detect any unexpected behavior that could signal poisoning.
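
Here's a minimal sketch of one such validation check, assuming a single numeric feature column. It flags outliers with a modified z-score based on the median absolute deviation, which is harder for a handful of poisoned points to skew than a plain mean and standard deviation. The function name, the sample values, and the 3.5 cutoff are illustrative assumptions, not settings from any particular pipeline.

```python
from statistics import median


def flag_outliers(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds the threshold."""
    med = median(values)
    abs_dev = [abs(v - med) for v in values]
    mad = median(abs_dev)
    if mad == 0:
        # More than half the values equal the median: flag anything that differs.
        return [i for i, d in enumerate(abs_dev) if d > 0]
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return [i for i, d in enumerate(abs_dev) if 0.6745 * d / mad > threshold]


# Hypothetical feature column with one injected value.
feature_column = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 55.0, 10.1]
suspicious = flag_outliers(feature_column)
print(suspicious)  # indices to review before training
```

A flagged row isn't proof of poisoning. Treat it as a prompt to check where the record came from before it reaches training.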

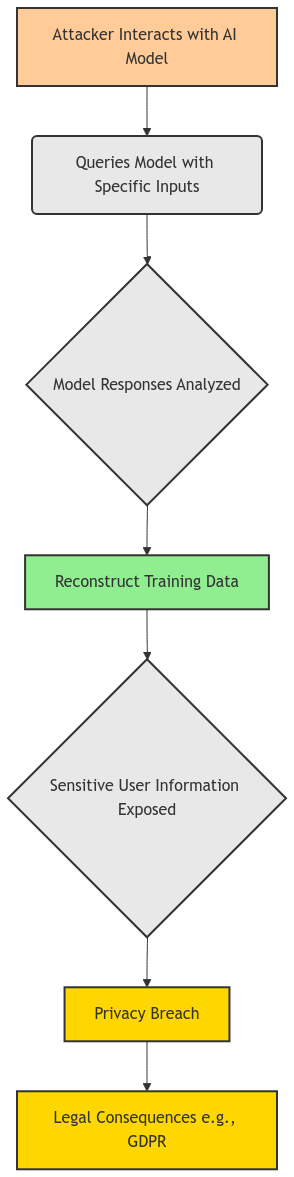

Neglecting Model Inversion Attacks: Protecting User Privacy

What it is: Model inversion attacks try to reconstruct training data, or sensitive attributes of it, from the model's outputs or parameters. Imagine someone being able to figure out your users' personal information just by interacting with your AI. Scary, right?

Why it's crucial for you: Your models might be trained on sensitive user data. Protecting this data is not just good practice; it's often a legal requirement (think GDPR).

The Fix:

Differential Privacy: This technique adds calibrated noise during training (for example, to clipped gradients in DP-SGD). That limits how much the model can reveal about any single record while still allowing it to learn effectively.

Limit Model Access: Control who can interact with your model and how. Implement strong authentication and authorization protocols.

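Use Federated Learning: Federated learning allows you to train models on decentralized data without the raw data ever leaving the user's device. The shared model updates can still leak information, so it's usually paired with differential privacy or secure aggregation.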
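
To make the differential privacy idea concrete, here's a rough sketch of the core step in DP-SGD: clip each example's gradient, sum, add Gaussian noise, then average. The gradients, `max_norm`, and `noise_multiplier` are made-up values for illustration. The sketch also does no privacy accounting, so it doesn't tell you what epsilon you end up with. In practice you'd lean on a library such as Opacus or TensorFlow Privacy for that.

```python
import math
import random


def clip(grad, max_norm):
    """Scale one example's gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def private_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each gradient, sum them, add Gaussian noise, then average."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[j] for g in clipped) for j in range(dim)]
    sigma = noise_multiplier * max_norm  # noise scale is tied to the clipping bound
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(per_example_grads) for v in noisy]


rng = random.Random(0)  # seeded only so the demo is repeatable
# Hypothetical per-example gradients; the second one is unusually large.
grads = [[0.5, -0.2], [30.0, 40.0], [0.1, 0.3]]
print(private_mean_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=rng))
```

Clipping bounds how much any single record can move the model, and the noise hides what's left of that influence.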

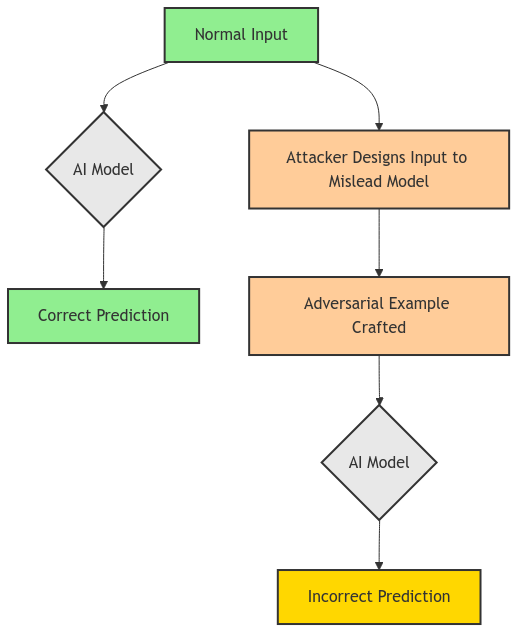

Overlooking Adversarial Examples: Fooling Your AI

What it is: Adversarial examples are inputs designed to trick your model into making incorrect predictions. It's like an optical illusion for AI.

Why you should care: Attackers can use adversarial examples to bypass security systems, manipulate recommendations, or even cause your model to misclassify objects in critical applications.

The Fix:

Adversarial Training: Train your model on both normal and adversarial examples. This helps it learn to recognize and resist these tricky inputs.

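Input Validation: Implement checks to detect and reject inputs that fall outside expected ranges or look unlike anything in your training data, since these are often signs of an attempt to mislead the model.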

Ensemble Methods: Use multiple models and combine their predictions. This makes it harder for an attacker to fool all the models simultaneously.
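
If you haven't seen an adversarial example built by hand, here's a small sketch using the Fast Gradient Sign Method (FGSM) against a toy logistic regression model. The weights, the input, and `epsilon` are hypothetical values picked so the effect is easy to see. Real attacks target much larger models, but the mechanics are the same.

```python
import math


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def fgsm(weights, bias, x, y_true, epsilon):
    """Shift each feature by epsilon in the direction that increases the loss."""
    p = predict_proba(weights, bias, x)
    # For log loss, the gradient with respect to x_i is (p - y_true) * w_i.
    grad = [(p - y_true) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


weights, bias = [2.0, -1.5], 0.1  # hypothetical trained parameters
x, y = [0.4, 0.3], 1              # a correctly classified input
x_adv = fgsm(weights, bias, x, y, epsilon=0.3)

print(predict_proba(weights, bias, x))      # above 0.5: predicted class 1
print(predict_proba(weights, bias, x_adv))  # below 0.5: prediction flipped
```

Adversarial training reuses this exact step: generate `x_adv` for your training inputs, keep the original labels, and mix those pairs into the next training round.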

Forgetting About Model Explainability: Trust Through Transparency

What it is: Explainable AI (XAI) is about making your model's decisions understandable to humans. It's like having a conversation with your AI to understand why it made a particular choice.

Why it matters for security: Understanding how your model works is essential for identifying potential vulnerabilities and biases. It also builds trust with users, especially in sensitive applications.

The Fix:

Use Interpretable Models: When possible, choose models that are inherently more transparent, like decision trees or linear models.

Employ XAI Techniques: Use tools like LIME and SHAP to visualize and explain your model's predictions.

Document Your Model: Keep detailed records of your model's architecture, training data, and decision-making process.
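
To give a feel for what SHAP is estimating under the hood, here's a brute-force sketch that computes exact Shapley values for a tiny model. To be clear, this is not the SHAP library's API. It's the underlying idea written out by hand, and it only scales to a handful of features. The `score` function, the input, and the all-zeros baseline are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Attribute model(x) - model(baseline) to each feature exactly.

    Features outside a coalition take their baseline value.
    The cost grows as 2^n, so keep n small.
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def score(features):
    # Hypothetical risk score with an interaction between features 0 and 1.
    return 3.0 * features[0] + 2.0 * features[0] * features[1] - features[2]


x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(score, x, baseline)
print(phi)
```

The attributions always add up to the gap between the prediction and the baseline prediction, which makes them easy to sanity-check and to record in your model documentation.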

Skipping Regular Model Audits: Staying Ahead of Threats

What it is: Model auditing is like giving your AI a regular health check-up. It involves systematically reviewing your model's performance, security, and fairness.

Why it's non-negotiable: The AI landscape is constantly evolving. New threats emerge, and your model's performance can drift over time. Regular audits help you stay ahead of the curve.

The Fix:

Establish an Audit Schedule: Conduct audits at regular intervals, such as quarterly or annually.

Use Automated Tools: Leverage tools that can automatically test your model for vulnerabilities, biases, and performance issues.

Involve Third-Party Experts: Consider bringing in external auditors to provide an unbiased perspective on your model's security.
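
An automated audit check can start very small. The sketch below, with assumed names and thresholds throughout, flags two things: input drift on one numeric feature using the Population Stability Index (PSI), and a drop in accuracy against a recorded baseline. The 0.2 PSI limit is a common rule of thumb, not a standard, so tune it for your own data.

```python
import math


def population_stability_index(expected, actual, bins=5):
    """PSI between a reference sample and a recent sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def audit(reference, recent, baseline_accuracy, current_accuracy,
          psi_limit=0.2, max_accuracy_drop=0.02):
    """Return a list of human-readable findings; empty means nothing flagged."""
    findings = []
    psi = population_stability_index(reference, recent)
    if psi > psi_limit:
        findings.append(f"input drift: PSI={psi:.3f}")
    if baseline_accuracy - current_accuracy > max_accuracy_drop:
        findings.append("accuracy regression")
    return findings


# Hypothetical data: the recent sample is shifted well above the reference.
reference = [float(v) for v in range(100)]
shifted = [v + 60.0 for v in reference]
print(audit(reference, shifted, baseline_accuracy=0.90, current_accuracy=0.85))
```

Run something like this on a schedule and after every retraining, and keep the findings alongside your audit records.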

Underestimating Insider Threats: Protecting from Within

What it is: Insider threats come from people within your organization who have access to your AI systems. This could be intentional sabotage or accidental data leaks.

Why it's a real concern: Insiders often have legitimate access to sensitive data and systems, making them a significant security risk.

The Fix:

Implement Least Privilege Access: Only give employees access to the data and systems they absolutely need to do their jobs.

Monitor User Activity: Track user activity on your AI systems to detect any suspicious behavior.

Security Awareness Training: Educate your team about the importance of AI security and how to identify and report potential threats.
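
Least privilege and activity monitoring fit together naturally in code. Here's a minimal sketch, assuming a simple role-to-permission table. The roles, permission strings, and logger name are invented for illustration, and a real system would normally hand this off to your identity provider or platform IAM.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(message)s")
audit_log = logging.getLogger("ml_access_audit")

# Hypothetical role-to-permission mapping; anything not listed is denied.
ROLE_PERMISSIONS = {
    "data_scientist": {"read:features", "run:training"},
    "ml_engineer": {"read:features", "run:training", "deploy:model"},
    "analyst": {"read:predictions"},
}


def is_allowed(role, permission):
    """Deny by default: unknown roles get an empty permission set."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def authorize(user, role, permission):
    """Log every access decision, then allow or raise PermissionError."""
    allowed = is_allowed(role, permission)
    audit_log.info("user=%s role=%s permission=%s allowed=%s",
                   user, role, permission, allowed)
    if not allowed:
        raise PermissionError(f"{role} may not {permission}")
    return True


authorize("ana", "ml_engineer", "deploy:model")
try:
    authorize("bob", "analyst", "read:features")
except PermissionError as exc:
    print("blocked:", exc)
```

Logging denied attempts as well as granted ones matters here, since a run of denials is often the first sign of suspicious activity.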

Disregarding Secure Development Practices: Building Security In

What it is: Secure development practices are about integrating security into every stage of the AI development lifecycle. It's like building a house with a strong foundation.

Why it's your responsibility: You're building AI products that could impact millions of lives. Security needs to be a top priority, not an afterthought.

The Fix:

Threat Modeling: Identify potential security threats early in the development process and design your system to mitigate them.

Code Reviews: Have other developers review your code for security vulnerabilities before deploying it.

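Use Secure Libraries and Frameworks: Prefer well-maintained libraries, pin your dependencies and scan them for known vulnerabilities, and avoid loading serialized models or data from sources you don't trust.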
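
One habit that's cheap to adopt is verifying a model artifact's checksum before loading it, since formats like pickle can run arbitrary code when they're deserialized. The sketch below assumes you recorded a SHA-256 digest when the artifact was built. The function names and the temporary demo file are hypothetical.

```python
import hashlib
import hmac
import tempfile


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large artifacts don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path, expected_sha256):
    """Return the path if its digest matches, otherwise raise ValueError."""
    actual = sha256_of_file(path)
    if not hmac.compare_digest(actual, expected_sha256):
        raise ValueError(f"checksum mismatch for {path}")
    return path


# Demo with a throwaway file standing in for a real model artifact.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"model-weights-v1")
    artifact_path = tmp.name

expected = hashlib.sha256(b"model-weights-v1").hexdigest()
print(verify_artifact(artifact_path, expected))  # only load after this passes
```

A checksum only tells you the file hasn't changed since the digest was recorded, so store the expected digest somewhere an attacker can't rewrite along with the artifact.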

Actionable Tips for Data Scientists and Machine Learning Engineers:

Prioritize Data Security: Treat data as your most valuable asset. Implement robust data sanitization, validation, and access control measures.

Embrace Explainable AI: Make your models transparent and understandable. Use XAI techniques and document your model's decision-making process.

Conduct Regular Audits: Make model auditing a routine practice. Use automated tools and consider third-party expertise.

Stay Updated: The AI security landscape is constantly changing. Keep learning about new threats and best practices.

Champion Security: Advocate for a security-first culture within your team and organization.

Let's make the future of AI secure, together! 💪