Scaling AI Solutions for Production Environments

Struggling to scale your AI model? This guide offers solutions for data, computation, latency, and costs, with strategies for optimization and cloud use. 🚀

Let's be honest: building a cool AI model in a sandbox environment is one thing, but deploying and scaling it to handle real-world demands is a whole different game.

You've poured your heart and soul into developing a groundbreaking AI solution. Now comes the real test: scaling it for production. It's a challenge that can make or break your project.

This guide dives deep into the critical aspects of scaling AI solutions: the main challenges, then the strategies, tools, and best practices that help your AI hold up under the load of a production environment.

Understanding the Scaling Challenge

Scaling an AI solution isn't just about making it bigger. ❌ It's about ensuring it can handle increased data volumes, user traffic, and complex computations while maintaining performance, reliability, and cost-effectiveness.

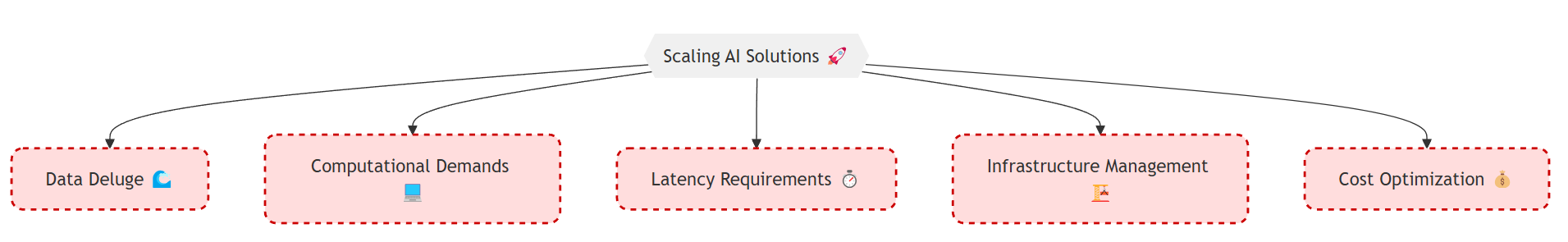

Key Challenges:

Data Deluge: Production environments often involve massive datasets that can overwhelm unprepared systems.

Computational Demands: AI models, especially deep learning ones, can be computationally intensive, requiring significant processing power.

Latency Requirements: Real-time applications demand quick responses, putting pressure on your AI's inference speed.

Infrastructure Management: Scaling often involves managing complex infrastructure, including servers, databases, and networks.

Cost Optimization: Scaling can lead to increased costs if not managed carefully.

Key Strategies for Scaling AI Solutions

1. Optimize Your AI Model: Efficiency is Key 🎯

Before you even think about scaling your infrastructure, take a hard look at your AI model itself. Is it as efficient as it could be? A well-optimized model can significantly reduce computational overhead and improve performance.

AI Model Optimization Techniques

How It Works:

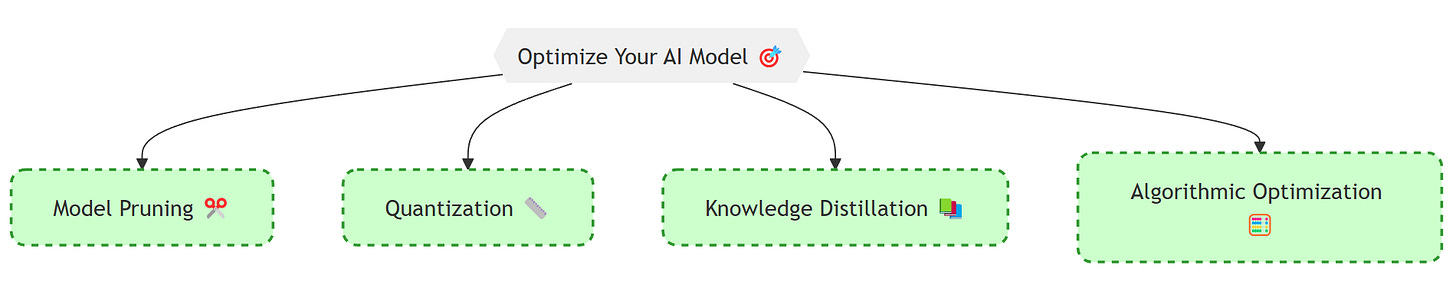

Model Pruning

Remove redundant connections or neurons in your neural network without significantly impacting accuracy. This reduces model size and improves efficiency.
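As a rough illustration of the idea, here is a minimal sketch of unstructured magnitude pruning on a flat list of weights. The function name `magnitude_prune` and the sample weights are made up for this example; real frameworks ship their own pruning utilities, and pruned models usually need fine-tuning afterwards.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights (unstructured pruning)."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    if n_prune == 0:
        return list(weights)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


# Hypothetical weights for a single tiny layer.
layer = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(layer, sparsity=0.5)
print(pruned)  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

The zeros only save memory or compute if the storage format or runtime can exploit sparsity, which is one reason to check the target hardware before pruning aggressively.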

Quantization

Reduce the precision of your model's weights and activations (e.g., from 32-bit floating point to 8-bit integers) to decrease memory footprint and speed up computations.
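To make the precision trade-off concrete, the sketch below applies a simple affine (asymmetric) 8-bit scheme to a handful of floats and measures the round-trip error. It is an illustration under simplifying assumptions (one scale for the whole tensor, hypothetical helper names `quantize_int8` and `dequantize`), not how any particular toolkit implements quantization.

```python
def quantize_int8(values):
    """Affine quantization of floats to the int8 range [-128, 127]."""
    lo = min(min(values), 0.0)  # widen the range so 0.0 maps exactly to an integer
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 if every value is zero
    zero_point = round(-128 - lo / scale)
    quantized = [
        max(-128, min(127, round(v / scale) + zero_point)) for v in values
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [(q - zero_point) * scale for q in quantized]


# Hypothetical weights.
weights = [-1.2, -0.3, 0.0, 0.4, 2.1]
codes, scale, zero_point = quantize_int8(weights)
restored = dequantize(codes, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, round(scale, 5), zero_point)
print("max round-trip error:", max_error)
```

Each value is stored in one byte instead of four, and the round-trip error stays within about half a quantization step (`scale / 2`). Whether that error is acceptable depends on the model, so validate accuracy after quantizing.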

Knowledge Distillation

Train a smaller "student" model to mimic the behavior of a larger, more complex "teacher" model, retaining high accuracy with reduced complexity.
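One common way to express "mimic the teacher" is a loss that compares temperature-softened output distributions. The sketch below computes that term for a single example in plain Python; the logits are invented, and in practice this term is typically combined with the ordinary loss on the true labels.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with a temperature parameter."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Hypothetical logits for a 3-class problem.
teacher = [3.0, 0.2, 0.1]
student_close = [2.8, 0.3, 0.1]
student_far = [0.1, 0.2, 3.0]

loss_close = distillation_loss(student_close, teacher)
loss_far = distillation_loss(student_far, teacher)
print(loss_close, loss_far)
```

A higher temperature flattens both distributions, so the student also learns from the teacher's relative ranking of the wrong classes.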

Algorithmic Optimization

Explore different algorithms or model architectures that are inherently more efficient for your specific task.

Examples:

Efficient Architectures

MobileNet and EfficientNet: Families of neural network architectures designed for efficiency on mobile and embedded devices.

Optimization Tools

TensorRT and TensorFlow Lite: Tools that optimize and quantize models for deployment on various platforms.

Advancements in Model Optimization:

Recent advancements have enhanced these techniques, often integrating them for superior efficiency. Here are key innovations:

Combined Approaches

Integrating pruning, quantization, and knowledge distillation has proven highly effective. For example, the PQK method combines all three to create lightweight, power-efficient models suitable for edge devices.

Read more on arXiv

Pruning During Distillation (PDD)

Innovations like Pruning during Distillation (PDD) simultaneously prune networks and apply knowledge distillation, optimizing student models without pre-trained teachers.

Read more on Springer Link

Reinforcement Learning for Pruning

Using reinforcement learning to automate pruning decisions has led to more efficient compression, as demonstrated in recent studies.

Read more on MDPI

Structured Pruning with Attention Mechanisms

Incorporating attention mechanisms during pruning helps identify and retain critical features, enhancing model performance post-compression.

Read more on MDPI

Practical Tools and Applications

Tools like TensorRT and TensorFlow Lite continue to support these optimization techniques, facilitating deployment across various platforms. Leveraging these advancements effectively requires staying updated with the latest research and tools.

Common Mistakes to Avoid:

Over-Optimization: Aggressive optimization can sometimes lead to a significant drop in accuracy. Always validate your optimized model's performance.

Ignoring Hardware Constraints: Consider the target hardware's limitations when optimizing. An optimization that works well on a GPU might not be suitable for a CPU.

Lack of Profiling: Don't optimize blindly. Use profiling tools to identify performance bottlenecks before and after optimization.
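For the profiling point above, even a small timing harness gives you numbers to compare before and after an optimization. This is a minimal sketch using only the standard library; `fake_predict` is a hypothetical stand-in for your model's inference call.

```python
import math
import statistics
import time


def measure_latency(predict, inputs, warmup=3):
    """Return latency statistics in milliseconds for repeated calls to predict."""
    for x in inputs[:warmup]:
        predict(x)  # warm-up calls are not timed
    samples = []
    for x in inputs:
        start = time.perf_counter()
        predict(x)
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p95_index = max(0, math.ceil(0.95 * len(samples)) - 1)
    return {
        "p50_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }


def fake_predict(x):
    """Hypothetical stand-in for a model's inference call."""
    return sum(i * i for i in range(1000 + x))


report = measure_latency(fake_predict, list(range(200)))
print(report)
```

Tail latency (p95 and above) is usually what users notice, so report it alongside the median rather than relying on an average.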

Things to Watch Out For:

Accuracy Trade-offs: Ensure that any optimization doesn't degrade your model's accuracy below acceptable levels.

Retraining Requirements: Some optimization techniques, like pruning, might require retraining your model.

Compatibility Issues: Verify that your optimized model is compatible with your deployment environment.

2. Scale Your Infrastructure: Building a Solid Foundation 🏗️

Once your model is optimized, it's time to scale your infrastructure. This involves choosing the right hardware, software, and architecture to handle the increased workload.

How It Works:

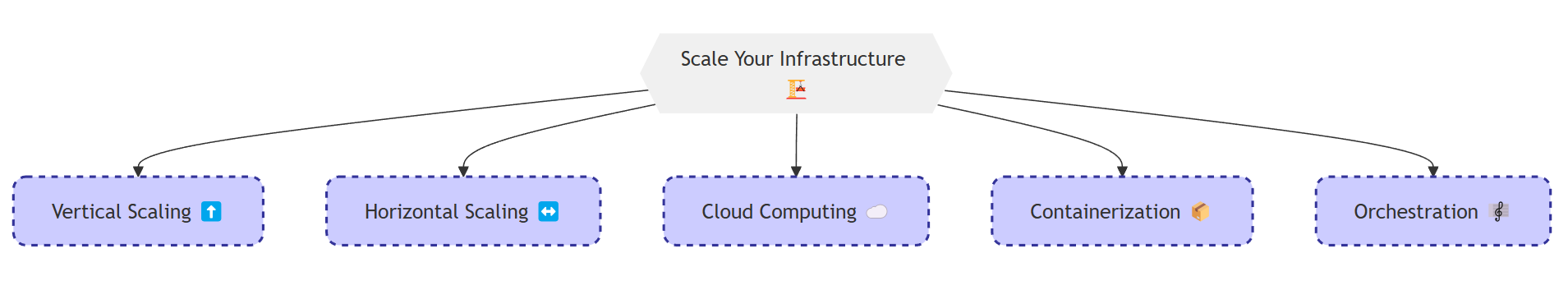

Vertical Scaling: Upgrade your existing servers with more powerful CPUs, GPUs, or more RAM.

Horizontal Scaling: Add more servers to your cluster to distribute the workload.

Cloud Computing: Leverage cloud platforms like AWS, Google Cloud, or Azure to dynamically scale your resources based on demand.

Containerization: Package your AI application and its dependencies into containers (e.g., using Docker) for easy deployment and scaling.

Orchestration: Use tools like Kubernetes to manage and automate the deployment, scaling, and management of your containerized applications.
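To show what automated horizontal scaling boils down to, the sketch below reproduces the core ratio that Kubernetes documents for its Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The bounds, metric values, and function name are assumptions for the example, and the real autoscaler adds tolerances and stabilization windows that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to how far the metric is from target."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical metric: average CPU utilization (%) per replica, target 60%.
print(desired_replicas(4, current_metric=90, target_metric=60))   # 6 (scale out)
print(desired_replicas(4, current_metric=30, target_metric=60))   # 2 (scale in)
print(desired_replicas(4, current_metric=300, target_metric=60))  # 10 (capped)
```

The `max_replicas` cap doubles as a cost guardrail, which ties into the over-provisioning concern discussed in this section.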

Examples:

Amazon SageMaker: A fully managed AWS service for building, training, and deploying machine learning models.

Google Cloud Vertex AI (the successor to AI Platform): A suite of services for developing and deploying AI solutions on Google Cloud.

Azure Machine Learning: A cloud-based environment for training, deploying, automating, and managing machine learning models.

Common Mistakes to Avoid:

Over-Provisioning: Allocating more resources than needed can lead to unnecessary costs.

Under-Provisioning: Insufficient resources can lead to performance bottlenecks and slow response times.

Ignoring Network Bandwidth: Data transfer between servers can become a bottleneck if not considered.

Things to Watch Out For:

Cost Management: Monitor your infrastructure costs and optimize resource utilization.

Security: Implement appropriate security measures to protect your data and infrastructure.

Monitoring and Logging: Use monitoring and logging tools to track your system's performance and catch issues early. This is crucial: without it, regressions introduced by scaling changes go unnoticed.

3. Optimize Data Pipelines: Feeding the Beast Efficiently 🧮

Your AI model is only as good as the data it receives. A well-designed data pipeline ensures that data is efficiently collected, processed, and fed to your model.

How It Works:

Data Preprocessing: Clean, transform, and normalize your data before feeding it to your model.

Batching: Group data into batches to reduce the number of individual requests to your model.

Caching: Store frequently accessed data in a cache to reduce latency.

Asynchronous Processing: Use asynchronous processing to handle data ingestion and preprocessing in the background, without blocking your main application.
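The four ideas above can be combined in a few lines. The following is a minimal sketch using only the standard library: a cached preprocessing step, a batching helper, and an `asyncio` queue so preprocessing and the model call overlap. The names `normalize`, `batched`, and `run_pipeline` are hypothetical, and the "model call" is a placeholder that just records batch sizes.

```python
import asyncio
from functools import lru_cache


def batched(items, batch_size):
    """Yield consecutive lists of at most batch_size items."""
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


@lru_cache(maxsize=1024)
def normalize(text):
    """Hypothetical preprocessing step; cached so repeated inputs are not recomputed."""
    return " ".join(text.lower().split())


async def producer(queue, records, batch_size):
    """Preprocess records and hand them to the consumer in batches."""
    for batch in batched(records, batch_size):
        await queue.put([normalize(r) for r in batch])
    await queue.put(None)  # sentinel: no more batches


async def consumer(queue, results):
    """Placeholder for a batched model call; records each batch size."""
    while True:
        batch = await queue.get()
        if batch is None:
            break
        results.append(len(batch))


async def run_pipeline(records, batch_size=3):
    queue = asyncio.Queue(maxsize=2)  # bounded queue applies backpressure
    results = []
    await asyncio.gather(producer(queue, records, batch_size),
                         consumer(queue, results))
    return results


records = ["Hello  World", "hello world", "Foo", "BAR baz",
           "foo", "Hello  World", "qux"]
batch_sizes = asyncio.run(run_pipeline(records))
print(batch_sizes)             # [3, 3, 1]
print(normalize.cache_info())  # one cache hit for the repeated record
```

The bounded queue is a deliberate choice: if the model falls behind, the producer waits instead of filling memory with preprocessed batches.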

Examples:

Apache Kafka: A distributed streaming platform for handling real-time data feeds.

Apache Spark: A unified analytics engine for large-scale data processing.

TensorFlow Datasets (TFDS): Provides a collection of ready-to-use datasets that load as tf.data pipelines, which you can use to build efficient input pipelines.

Common Mistakes to Avoid:

Inefficient Data Formats: Using inefficient data formats can slow down data loading and processing.

Lack of Parallelism: Not taking advantage of parallelism in data processing can lead to bottlenecks.

Ignoring Data Locality: Transferring large amounts of data over the network can be slow. Try to process data close to where it's stored.

Things to Watch Out For:

Data Consistency: Ensure that your data pipeline maintains data consistency and integrity.

Error Handling: Implement robust error handling to deal with data quality issues or pipeline failures.

Scalability: Design your data pipeline to handle increasing data volumes.

Advice and Tips for Scaling AI Solutions

Start with a Thorough Assessment: Before embarking on any scaling efforts, conduct a detailed analysis of your current system's performance, resource utilization, and bottlenecks. This will help you identify areas that need optimization and choose the right scaling strategies.

Automate Everything: Automation is your best friend when it comes to scaling. Automate your model training, deployment, testing, and monitoring processes to reduce manual effort and improve efficiency.

Monitor and Optimize Continuously: Scaling is not a one-time event. Continuously monitor your system's performance, identify new bottlenecks, and optimize your model, infrastructure, and data pipelines as needed.

Introduce DevOps Practices: Adopt DevOps principles to foster collaboration between your development, operations, and data science teams. This will help streamline your AI development and deployment lifecycle.

Consider Serverless Architectures: Serverless computing can be a cost-effective way to scale AI applications, especially for workloads with fluctuating demands. You only pay for the compute resources you consume, and the platform automatically handles scaling.

Use Load Balancing: Distribute incoming traffic across multiple instances of your AI application to prevent overload and ensure high availability. Load balancers can intelligently route requests based on server load, health, and other factors.

Implement Caching Strategies: Caching can significantly improve the performance of your AI application by storing frequently accessed data or model predictions closer to the user or application.
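As an illustration of the load balancing tip above, here is a minimal sketch of a least-connections policy that also skips unhealthy instances. The class and backend names are hypothetical; in production you would normally rely on a managed or off-the-shelf load balancer rather than writing one.

```python
class LeastConnectionsBalancer:
    """Route each request to the healthy backend with the fewest active requests."""

    def __init__(self, backends):
        self.active = {name: 0 for name in backends}
        self.healthy = set(backends)

    def mark_unhealthy(self, name):
        self.healthy.discard(name)

    def mark_healthy(self, name):
        if name in self.active:
            self.healthy.add(name)

    def acquire(self):
        """Pick a backend for a new request and count it as active."""
        candidates = [b for b in self.active if b in self.healthy]
        if not candidates:
            raise RuntimeError("no healthy backends available")
        chosen = min(candidates, key=lambda b: self.active[b])
        self.active[chosen] += 1
        return chosen

    def release(self, name):
        """Call when a request to the backend finishes."""
        self.active[name] -= 1


# Hypothetical model-serving instances.
lb = LeastConnectionsBalancer(["model-a", "model-b", "model-c"])
first_three = [lb.acquire() for _ in range(3)]
lb.release("model-b")             # model-b finishes its request
after_release = lb.acquire()      # model-b is now the least loaded
lb.mark_unhealthy("model-b")
after_failure = lb.acquire()      # model-b is skipped
print(first_three, after_release, after_failure)
```

Least-connections tends to suit inference workloads better than plain round-robin because request durations vary with input size.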

With careful planning and execution, you can transform your AI prototype into a robust, scalable solution that delivers real business value.

❓Questions Deep Dive:

1️⃣ How can companies quantify the ROI of implementing these scaling strategies, especially considering the upfront investment in infrastructure and optimization?

Direct Cost Savings: Calculate reductions in operational costs due to efficiencies gained from optimized models and infrastructure. This includes lower compute costs, reduced energy consumption, and decreased need for manual intervention.

Performance Improvements: Measure improvements in key performance indicators (KPIs) like inference speed, model accuracy, and system uptime. Translate these into business value, such as increased customer satisfaction or higher conversion rates.

Opportunity Cost Analysis: Evaluate the potential revenue or savings lost by not scaling. This could involve estimating the impact of delayed product launches, missed market opportunities, or inability to handle increased demand.
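A back-of-the-envelope calculation can turn the points above into numbers. The sketch below uses the standard ROI and payback-period formulas; every figure in it is invented for illustration, and the function names are hypothetical.

```python
def roi(investment, annual_savings, annual_revenue_gain):
    """First-year ROI: (benefit - investment) / investment."""
    benefit = annual_savings + annual_revenue_gain
    return (benefit - investment) / investment


def payback_months(investment, annual_savings, annual_revenue_gain):
    """Months until cumulative benefit covers the upfront investment."""
    monthly_benefit = (annual_savings + annual_revenue_gain) / 12
    return investment / monthly_benefit


# Hypothetical figures: upfront cost, yearly compute savings, yearly revenue uplift.
investment = 200_000
savings = 150_000
revenue_gain = 100_000

print(f"ROI: {roi(investment, savings, revenue_gain):.0%}")  # ROI: 25%
print(f"Payback: {payback_months(investment, savings, revenue_gain):.1f} months")  # Payback: 9.6 months
```

The hard part is estimating the inputs, especially the revenue attributable to better latency or uptime, so it helps to run the calculation with pessimistic and optimistic values as well.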

2️⃣ Beyond the technical aspects, what organizational changes are necessary to successfully transition an AI project from pilot to production?

Cross-Functional Collaboration: Establish dedicated teams comprising data scientists, ML engineers, DevOps, and product managers to ensure seamless integration and deployment of AI solutions.

Upskilling and Reskilling: Invest in training programs to equip existing staff with the necessary skills to work with and maintain scaled AI systems. This might involve new programming languages, cloud technologies, or MLOps practices.

Change Management: Implement robust change management processes to address potential resistance to adoption and ensure smooth transition to AI-driven workflows. Communicate the benefits of AI clearly and provide ongoing support to users.

3️⃣ What are some emerging technologies or trends that are likely to impact the future of scaling AI solutions?

Edge Computing: Processing data closer to the source (e.g., on IoT devices) rather than in centralized data centers. This reduces latency and bandwidth requirements and improves real-time performance, fundamentally changing how we scale AI.

Federated Learning: Training models collaboratively across multiple decentralized devices or servers holding local data samples, without exchanging them. This enhances privacy, reduces data transfer needs, and enables scaling to massive datasets.

Neuromorphic Computing: Designing hardware that mimics the structure and function of the human brain, potentially offering unprecedented energy efficiency and computational power for AI workloads.
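To make the federated learning item above more concrete, the sketch below shows the aggregation step of federated averaging: each client trains locally and sends only its weights, and the server averages them weighted by local dataset size. The client data is made up, and real systems add secure aggregation, client sampling, and many training rounds.

```python
def federated_average(client_updates):
    """Average client weight vectors, weighted by each client's sample count.

    client_updates: list of (weights, num_samples) tuples.
    """
    total_samples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_samples
        for i in range(dim)
    ]


# Hypothetical updates from two clients; raw training data never leaves them.
updates = [
    ([1.0, 2.0], 100),  # client A trained on 100 samples
    ([3.0, 4.0], 300),  # client B trained on 300 samples
]
global_weights = federated_average(updates)
print(global_weights)  # [2.5, 3.5]
```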

4️⃣ How can smaller companies or startups with limited resources effectively compete with larger players when it comes to scaling AI?

Cloud-Based Solutions: Leverage cloud platforms (AWS, Google Cloud, Azure) to access scalable infrastructure on a pay-as-you-go basis, avoiding large upfront investments.

Open Source Tools: Utilize powerful open-source libraries (TensorFlow, PyTorch) and frameworks to reduce development costs and benefit from community support.

Focus on Niche Applications: Concentrate on specific, well-defined problems where AI can deliver high value, rather than trying to compete on breadth with larger companies.

Serverless Architectures: Consider serverless computing to automatically scale applications based on demand, optimizing resource utilization and minimizing operational overhead.

5️⃣ In the context of global data privacy regulations, what are the key considerations for scaling AI solutions that handle sensitive user information?

Data Minimization: Collect and process only the minimum necessary data required for the AI application's functionality. Implement data anonymization and pseudonymization techniques where possible.

Secure Data Storage and Transfer: Employ encryption and robust access controls to protect data at rest and in transit. Comply with relevant data security standards (e.g., ISO 27001).

Privacy-Preserving AI Techniques: Explore methods like federated learning, differential privacy, and homomorphic encryption to train models without compromising individual privacy.

Transparency and User Control: Provide clear information to users about how their data is being used and obtain explicit consent for data processing. Offer mechanisms for users to access, modify, and delete their data.
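Of the privacy-preserving techniques listed above, differential privacy is the easiest to sketch. The example below applies the Laplace mechanism to a count query: noise with scale sensitivity / epsilon is added before the result is released. The function name, the counts, and the epsilon values are assumptions for illustration; production systems should use a vetted library rather than hand-rolled noise.

```python
import random


def private_count(true_count, epsilon, rng, sensitivity=1.0):
    """Release a count with Laplace noise of scale sensitivity / epsilon."""
    scale = sensitivity / epsilon
    # The difference of two independent exponentials is Laplace-distributed.
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return true_count + noise


rng = random.Random(0)  # fixed seed so the sketch is repeatable
true_count = 1000       # hypothetical number of users matching a query

strict = [private_count(true_count, epsilon=0.1, rng=rng) for _ in range(20000)]
loose = [private_count(true_count, epsilon=1.0, rng=rng) for _ in range(20000)]

mean_loose = sum(loose) / len(loose)
avg_error_strict = sum(abs(x - true_count) for x in strict) / len(strict)
avg_error_loose = sum(abs(x - true_count) for x in loose) / len(loose)
print(mean_loose, avg_error_strict, avg_error_loose)
```

A smaller epsilon gives stronger privacy but noisier answers, so the privacy budget is effectively another accuracy trade-off to monitor as you scale.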

6️⃣ How can AI models be designed from the outset to be more inherently scalable and adaptable to future growth?

Modular Design: Decompose complex models into smaller, independent modules that can be scaled and updated individually. This simplifies maintenance, allows for parallel development, and enhances adaptability.

Parameterized Complexity: Design models with adjustable parameters that control their complexity (e.g., number of layers, units). This allows for easy scaling up or down based on available resources and performance requirements.

Use of Transfer Learning: Leverage pre-trained models as a starting point, fine-tuning them for specific tasks. This reduces training time and data requirements, making scaling more efficient.

Standardized Interfaces: Design models with well-defined input and output interfaces that adhere to industry standards. This facilitates interoperability with different systems and simplifies integration into larger pipelines.
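As a small illustration of parameterized complexity, the sketch below describes a fully connected network with a config object whose width and depth can be dialed up or down, and computes the resulting parameter count. `MLPConfig` and its fields are hypothetical names; the same pattern applies to any architecture with tunable size.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MLPConfig:
    """Hypothetical config for a fully connected network with tunable size."""
    input_dim: int
    output_dim: int
    hidden_units: int = 64
    num_layers: int = 2  # number of hidden layers

    def layer_sizes(self):
        return [self.input_dim] + [self.hidden_units] * self.num_layers + [self.output_dim]

    def parameter_count(self):
        """Weights plus biases for each dense layer."""
        sizes = self.layer_sizes()
        return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


small = MLPConfig(input_dim=16, output_dim=4, hidden_units=32, num_layers=1)
large = MLPConfig(input_dim=16, output_dim=4, hidden_units=128, num_layers=3)
print(small.parameter_count())  # 676
print(large.parameter_count())  # 35716
```

Because both variants share the same input and output dimensions, they can sit behind the same interface, which lets you swap model sizes as resources or latency targets change.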

7️⃣ What ethical considerations should be taken into account when scaling AI, particularly concerning potential biases and societal impact?

Bias Detection and Mitigation: Implement rigorous testing procedures to identify and mitigate biases in training data and model outputs. Use fairness-aware algorithms and techniques to ensure equitable outcomes across different demographic groups.

Transparency and Explainability: Design AI systems that can provide clear explanations for their decisions, especially in high-stakes applications. This fosters trust and accountability, and facilitates the identification of potential issues.

Societal Impact Assessment: Evaluate the broader societal implications of scaled AI deployments, considering potential impacts on employment, access to resources, and social equity. Engage with stakeholders and experts to develop responsible deployment strategies.

Continuous Monitoring: Regularly audit scaled AI systems for ethical compliance, bias, and unintended consequences. Establish feedback mechanisms to address concerns and adapt to evolving ethical standards.
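One simple check that fits into the bias testing and continuous monitoring described above is the demographic parity difference: the gap between groups in the rate of positive predictions. The sketch below computes it on made-up data; the group labels and function names are hypothetical, and this is only one of several fairness metrics, each with its own limitations.

```python
def selection_rates(predictions, groups):
    """Fraction of positive predictions (1) per group."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred == 1 else 0)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical binary predictions for two demographic groups.
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(predictions, groups))                # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(predictions, groups))  # 0.5
```

Tracking a metric like this on live traffic, alongside accuracy and latency, turns the audit from a one-off exercise into part of regular monitoring.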