Must-See AI Innovations This Week - 2 October 2024

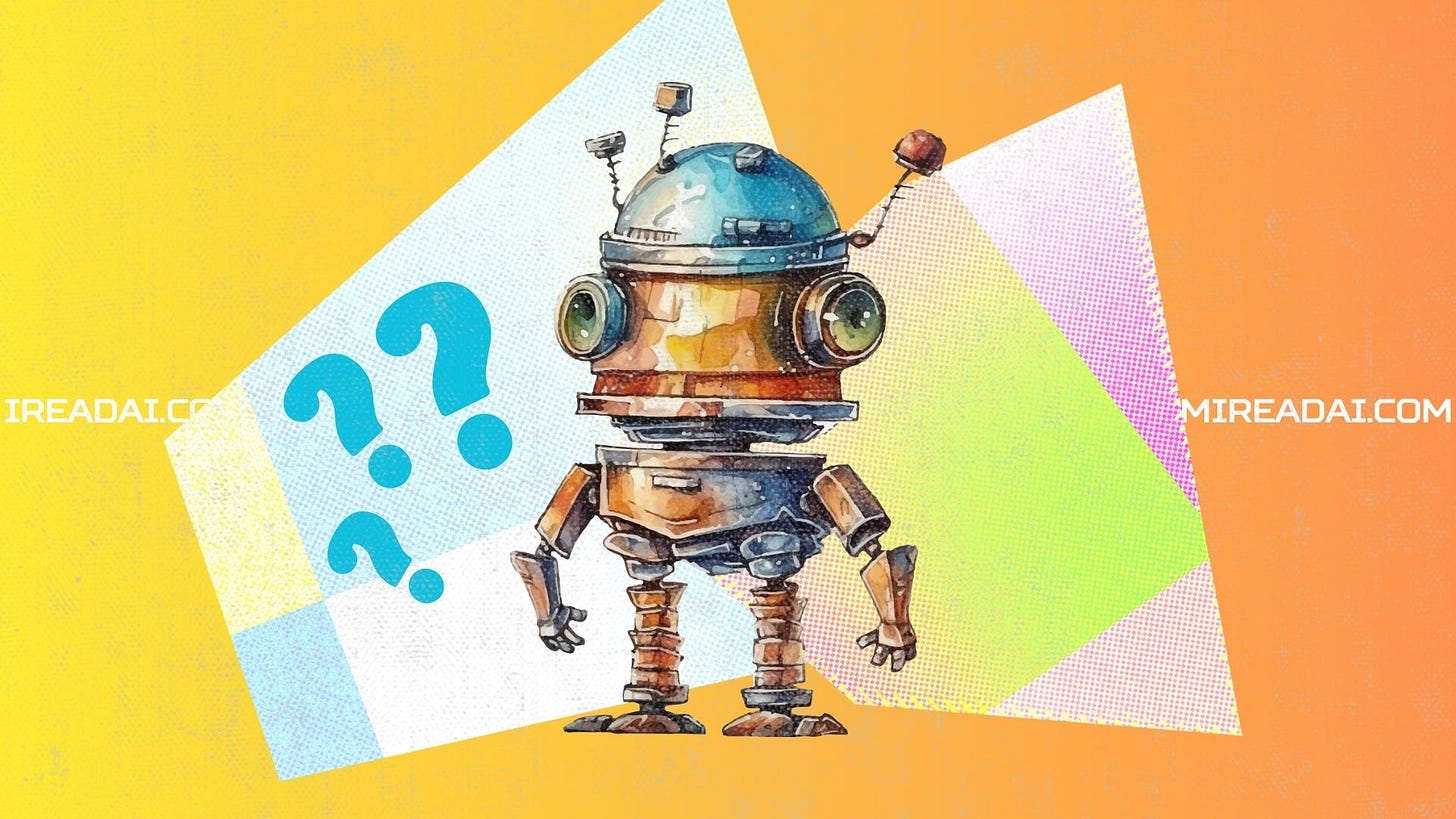

Google DeepMind's AlphaChip uses AI to design computer chips, producing in hours layouts that took human engineers months. It is already used to design Google's TPUs, and its public release could accelerate AI chip design more broadly.

Research Highlights

Google DeepMind's AlphaChip: AlphaChip uses an "edge-based" graph neural network that learns the relationships between interconnected chip components and generalizes across designs, which lets it produce optimized layouts in hours instead of months. It has been used to design Google's latest TPUs, contributing to performance improvements and faster design cycles. For businesses, the implications include faster innovation in sectors that depend on advanced computing and potentially lower production costs, since shorter design cycles make chip development cheaper.
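
The description above names an "edge-based" graph neural network without further detail. As a rough illustration of what edge-centric message passing means, the sketch below treats a netlist as a graph: it computes an embedding for each connection (edge) from its two endpoints, then updates each component (node) as the mean of the edges that touch it. The function `edge_based_layer`, the toy weights, and the mean aggregation are assumptions chosen for illustration, not AlphaChip's actual implementation.

```python
from typing import Dict, List, Tuple

Vector = List[float]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU."""
    return [max(0.0, x) for x in v]


def matvec(w: List[List[float]], v: Vector) -> Vector:
    """Multiply matrix w (a list of rows) by vector v."""
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def edge_based_layer(
    node_feats: Dict[str, Vector],
    edges: List[Tuple[str, str]],
    w_edge: List[List[float]],
) -> Dict[str, Vector]:
    """One round of edge-centric message passing (hypothetical sketch).

    1. Embed each edge from the concatenated features of its two endpoints.
    2. Update each node to the mean embedding of the edges incident to it.
    """
    # Step 1: one embedding per connection (list + list concatenates the features).
    edge_embs = [relu(matvec(w_edge, node_feats[u] + node_feats[v])) for u, v in edges]

    # Step 2: aggregate edge embeddings back onto the components they connect.
    dim = len(w_edge)
    sums = {n: [0.0] * dim for n in node_feats}
    counts = {n: 0 for n in node_feats}
    for (u, v), emb in zip(edges, edge_embs):
        for n in (u, v):
            sums[n] = [s + x for s, x in zip(sums[n], emb)]
            counts[n] += 1
    # Isolated nodes (no edges) keep a zero vector.
    return {n: [s / max(counts[n], 1) for s in sums[n]] for n in node_feats}


# Toy netlist (hypothetical): two macros both wired to one standard cell.
nodes = {"macro_a": [1.0, 0.0], "macro_b": [0.0, 1.0], "cell_c": [1.0, 1.0]}
edges = [("macro_a", "cell_c"), ("macro_b", "cell_c")]
w_edge = [[1.0, 0.0, 0.0, 1.0],  # 2 x 4 weights: concat(src, dst) -> 2-dim edge embedding
          [0.0, 1.0, 1.0, 0.0]]
updated = edge_based_layer(nodes, edges, w_edge)
print(updated)  # {'macro_a': [2.0, 1.0], 'macro_b': [1.0, 2.0], 'cell_c': [1.5, 1.5]}
```

Because the shared cell averages information from both macros, stacking several such layers lets each component's embedding reflect its wider wiring neighbourhood, which is the kind of structural signal a placement policy can generalize from.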

Meta's Llama 3.2: Llama 3.2 brings multimodality to the Llama family with 11B and 90B vision models that process both images and text, alongside lightweight 1B and 3B text-only models optimized for mobile and edge devices. The lightweight models were built using pruning and knowledge distillation, which makes them well suited to content creation and to on-device AI, where keeping data on the device helps protect privacy. From a business perspective, Llama 3.2's range of sizes and its efficiency offer opportunities to streamline operations, reduce infrastructure costs, and extend AI applications across sectors, including mobile computing.
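
Knowledge distillation is mentioned above without detail. A common formulation is the soft-target loss: a small "student" model is trained to match the temperature-softened output distribution of a larger "teacher". The sketch below computes that loss for a single token's logits; it shows the generic technique only, not Meta's training recipe, and the temperature of 2.0 and the example logits are arbitrary assumptions.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() from overflowing."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """Soft-target loss: KL(teacher || student) on softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(ps)) for pt, ps in zip(p_teacher, p_student))
    # Multiplying by T^2 keeps gradient magnitudes comparable across temperatures.
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]                               # hypothetical teacher logits for one token
print(distillation_loss(teacher, teacher))              # 0.0: student already matches
print(distillation_loss([0.5, 1.0, 4.0], teacher) > 0)  # True: mismatch is penalized
```

A higher temperature flattens both distributions, so the student also learns from the teacher's relative preferences among unlikely tokens rather than only its top choice. In practice this term is usually combined with the ordinary next-token loss.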

Capabilities Breakthroughs

Google NotebookLM Analyzes YouTube: Google’s NotebookLM tool now leverages multimodal capabilities from Gemini 1.5 to summarize YouTube videos by extracting insights from transcripts. This enhancement allows businesses to efficiently process large volumes of video content for market research, content analysis, and educational purposes. The ability to quickly extract and analyze video data opens new avenues for improving decision-making based on multimedia sources.

AI Masters reCAPTCHA with 100% Accuracy: Researchers at ETH Zurich developed an AI system that solves Google's reCAPTCHA v2 image challenges with 100% success, undermining a widely used security measure. While the result highlights how sophisticated AI has become, it also means businesses must adopt more robust authentication methods to stay ahead of increasingly capable AI systems, and it signals the need for continuous upgrades to online security.

Liquid AI Introduces Liquid Foundation Models (LFMs): Liquid AI's LFMs depart from the traditional transformer architecture, offering a smaller memory footprint and more efficient inference. Built from structured operators and adaptive linear units, LFMs outperform similarly sized transformer models on benchmarks such as MMLU-Pro and HellaSwag, according to Liquid AI, and are especially memory-efficient with long inputs. Businesses that need high-performance models for memory-intensive, context-aware tasks can use LFMs to reduce hardware costs while maintaining or improving performance.
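
Memory efficiency with long inputs matters because of the transformer baseline: a standard transformer caches keys and values for every past token, so its inference memory grows linearly with context length. The arithmetic below shows that growth for a hypothetical model configuration (32 layers, 8 key/value heads, head dimension 128, fp16 storage). It does not reproduce Liquid AI's own memory figures for LFMs.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int, seq_len: int,
                   bytes_per_value: int = 2, batch_size: int = 1) -> int:
    """Size of a standard transformer's key/value cache.

    The factor 2 accounts for storing both keys and values at every layer;
    bytes_per_value=2 assumes fp16/bf16 storage.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value * batch_size


# Hypothetical configuration, not any specific published model.
config = dict(num_layers=32, num_kv_heads=8, head_dim=128)
for seq_len in (4_096, 32_768, 131_072):
    gib = kv_cache_bytes(seq_len=seq_len, **config) / 2**30
    print(f"{seq_len:>7} tokens -> {gib:.1f} GiB")
```

With this configuration each token costs 128 KiB of cache, so the cache grows from 0.5 GiB at 4,096 tokens to 4 GiB at 32,768 and 16 GiB at 131,072 tokens, per sequence and on top of the model weights. An architecture whose inference state grows more slowly than this is where the claimed hardware savings for long inputs would come from.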

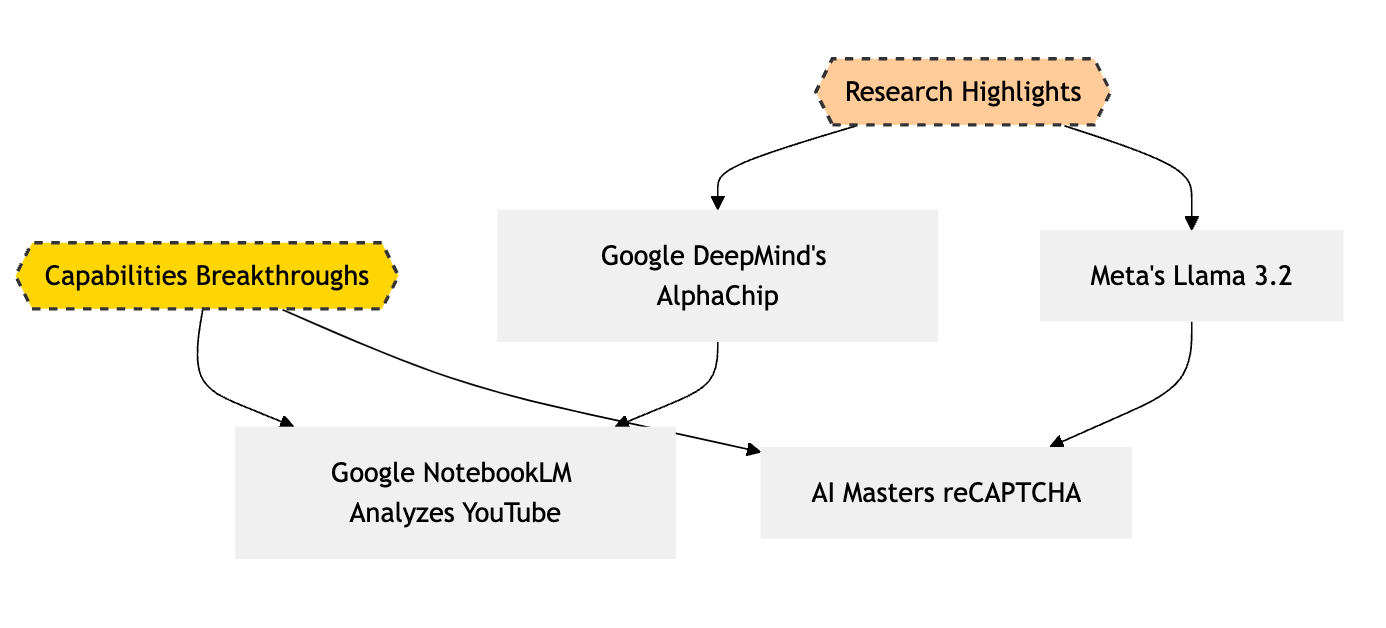

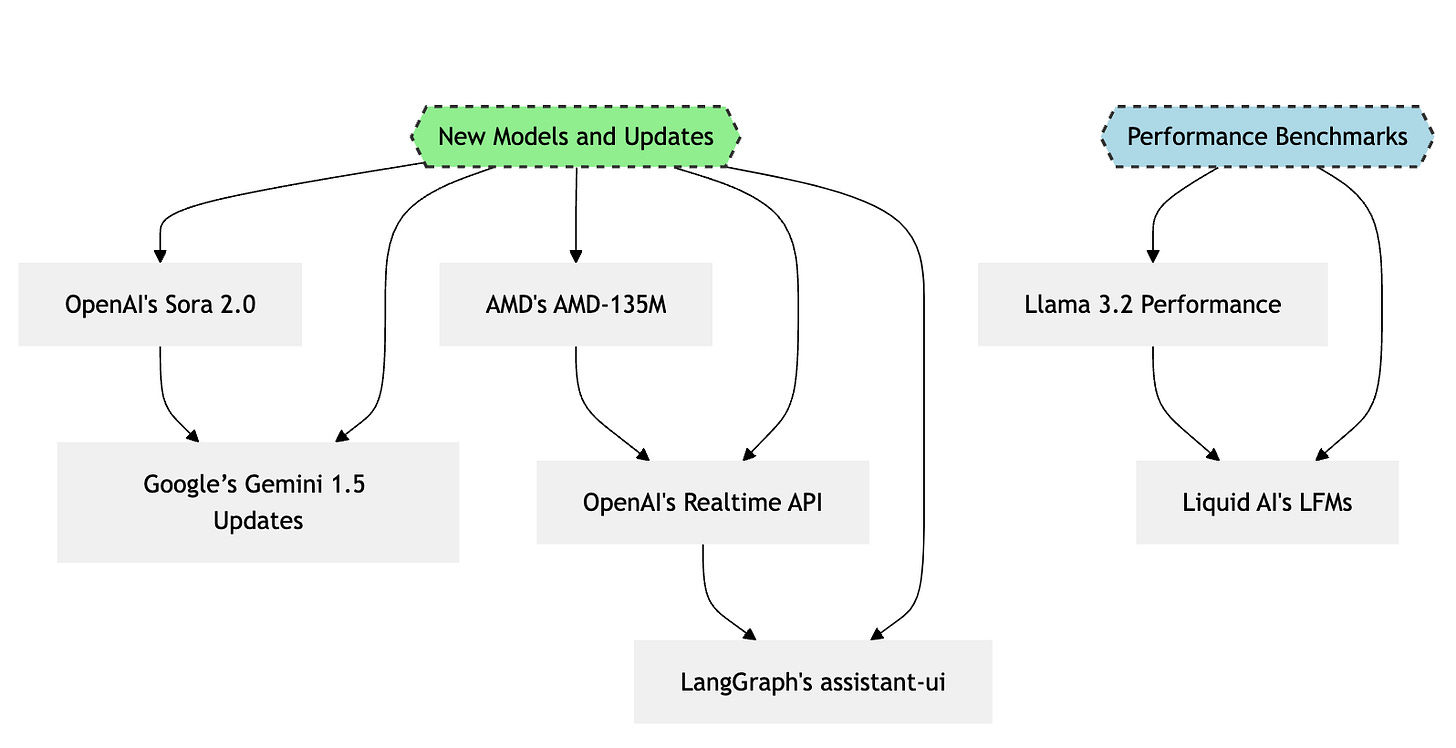

New Models and Updates

OpenAI's Sora 2.0: Sora 2.0 aims to significantly speed up video generation with higher-quality outputs, utilizing advanced techniques to extend the length and quality of video clips compared to its predecessor. For businesses, Sora 2.0 promises to cut down production time and costs in video creation, democratizing access to high-quality content creation and enabling more efficient media workflows.

Google’s Gemini 1.5 Updates: The updated Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002 models deliver better performance in math, code generation, and multimodal tasks, including gains of up to 20% on math benchmarks, along with faster output speeds. Lower costs and increased accessibility make this release a practical option for businesses looking to integrate AI into their operations efficiently, with strong ROI potential.

AMD Releases Its First Small Language Model, AMD-135M: AMD’s AMD-135M language model is optimized for AMD GPUs and offers strong performance for its size in text generation and comprehension tasks. Based on the Llama 2 architecture, the model was trained on 690B tokens and is open source, making it a compelling choice for businesses seeking efficient, hardware-optimized solutions for language tasks, particularly on AMD hardware.

OpenAI's Realtime API for Speech-to-Speech Applications: OpenAI’s new Realtime API supports continuous audio streaming, real-time function calling, and human-like voice interactions. This enables businesses to develop more natural voice-based applications in customer service, education, and other areas, improving user engagement and reducing latency in voice interactions.
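
Continuous audio streaming in the Realtime API works by sending JSON events over a WebSocket connection rather than uploading a finished audio file. The sketch below only builds the client-side event payloads for one spoken turn and contains no network code. The event type names (`input_audio_buffer.append`, `input_audio_buffer.commit`, `response.create`) follow OpenAI's Realtime API reference at launch and should be checked against the current documentation; the helper names, the 100 ms chunk size, and the assumption of 24 kHz mono PCM16 input are illustrative choices.

```python
import base64
import json
from typing import Iterator, List


def audio_append_events(pcm16_audio: bytes, chunk_bytes: int = 4800) -> Iterator[str]:
    """Split raw PCM16 audio into input_audio_buffer.append events with base64 payloads.

    4800 bytes is 100 ms of 24 kHz, 16-bit mono audio (an assumed format).
    """
    if chunk_bytes % 2:
        raise ValueError("chunk_bytes must be even so 16-bit samples are not split")
    for start in range(0, len(pcm16_audio), chunk_bytes):
        chunk = pcm16_audio[start:start + chunk_bytes]
        yield json.dumps({
            "type": "input_audio_buffer.append",
            "audio": base64.b64encode(chunk).decode("ascii"),
        })


def turn_events(pcm16_audio: bytes, chunk_bytes: int = 4800) -> List[str]:
    """All client events for one spoken turn: stream audio, commit it, request a reply."""
    events = list(audio_append_events(pcm16_audio, chunk_bytes))
    events.append(json.dumps({"type": "input_audio_buffer.commit"}))
    events.append(json.dumps({"type": "response.create"}))
    return events


# One second of silence at 24 kHz, 16-bit mono = 48,000 bytes -> 10 chunks + 2 control events.
silence = bytes(48_000)
events = turn_events(silence)
print(len(events))  # 12
```

Sending audio in small chunks as it is captured is what allows the server to start processing before the user finishes speaking. The explicit commit and `response.create` mirror the flow when server-side voice activity detection is turned off; with it enabled, the server decides when a turn ends.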

assistant-ui for LangGraph Enhances AI Application Development: assistant-ui offers a React-based chat interface that integrates with LangGraph Cloud and is optimized for streaming responses and human-in-the-loop approval. It supports multimodal interactions, making it easier for businesses to build intuitive AI applications with rich user interfaces, reducing development time and improving the customer experience of AI-driven solutions.

Performance Benchmarks

Llama 3.2 vs. Competition: According to Meta, the 11B and 90B vision models are competitive with Claude 3 Haiku and GPT-4o mini on image recognition and visual understanding tasks, and the 3B model outperforms similarly sized models such as Gemma 2 2.6B and Phi 3.5-mini on summarization and instruction-following tasks. For businesses, strong performance across multiple model sizes means more options for scalable, cost-efficient AI applications, from content generation to task automation.

Liquid AI's LFMs vs. Competitors: Liquid AI reports strong benchmark results for its LFMs: the LFM-1B model scores 58.55 on MMLU and the LFM-3B scores 66.16, while the 40B mixture-of-experts (MoE) model reaches 78.76, rivaling much larger models. Businesses can leverage the efficiency and scalability of LFMs for complex tasks that require long-context processing, making them an appealing alternative to traditional transformer models.