Learn How to Streamline Your AI Development Workflows

We'll cover centralized data management, AutoML, and collaborative platforms to transform your workflow from chaos to a well-oiled machine.

Developing AI can feel daunting 🙈. You've got to deal with data, model training, testing, and deployment. It's a lot 😱! But what if I told you there's a way to streamline these workflows, making your AI development journey smoother and more efficient?

This guide dives into practical strategies to optimize your AI development process. We'll explore how to cut through the noise, automate repetitive work, and focus on what truly matters: building cutting-edge AI solutions.

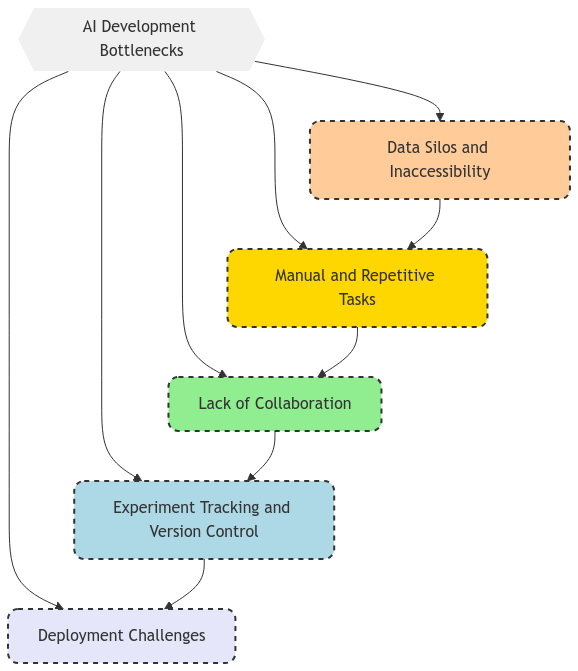

Understanding the Bottlenecks in AI Development

Before we jump into solutions, let's identify the common pain points that slow down AI development:

Data Silos and Inaccessibility: Data scattered across different systems, formats, and teams, making it hard to access and prepare.

Manual and Repetitive Tasks: Spending hours on tasks like data cleaning, labeling, and model tuning, draining time and resources.

Lack of Collaboration: Teams working in isolation, leading to duplicated efforts, inconsistencies, and integration challenges.

Experiment Tracking and Version Control: Difficulty managing experiments, tracking results, and reverting to previous model versions.

Deployment Challenges: Complex and lengthy deployment processes, hindering the transition from development to production.

Key Strategies to Streamline Your AI Development Workflow

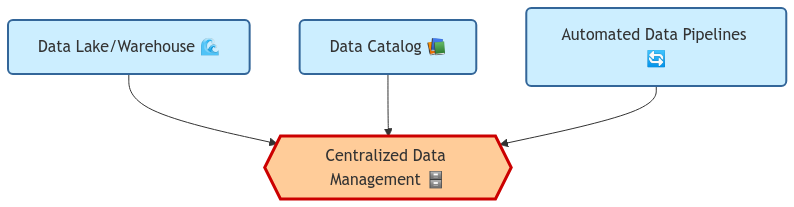

1. Centralized Data Management: Your Single Source of Truth 🗄️

Data is the lifeblood of AI 🩸. But when it's scattered across different systems and formats, it becomes a major bottleneck. Centralizing your data management is a game-changer.

How it Works:

Data Lake/Warehouse: Create a central repository to store all your data, structured and unstructured.

Data Catalog: Implement a data catalog to make datasets easily discoverable, understandable, and accessible.

Automated Data Pipelines: Build pipelines to ingest, clean, transform, and load data into your central repository automatically.
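To make the pipeline idea concrete, here is a minimal sketch of the ingest, clean, transform, and load steps described above. It uses only the Python standard library, with an in-memory SQLite database standing in for the central repository. The sample data, the column names, and the `users` table are all made up for illustration; a real pipeline would read from your own sources and write to your own lake or warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file or an API.
RAW_CSV = """user_id,country,age
1,us,34
2,DE,
3,us,29
3,us,29
"""

def ingest(text):
    """Parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def clean(rows):
    """Drop rows with a missing age and remove exact duplicates."""
    seen, cleaned = set(), []
    for row in rows:
        key = tuple(row.items())
        if row["age"] and key not in seen:
            seen.add(key)
            cleaned.append(row)
    return cleaned

def transform(rows):
    """Normalize types and casing so every consumer sees one format."""
    return [(int(r["user_id"]), r["country"].upper(), int(r["age"])) for r in rows]

def load(records, conn):
    """Write the prepared records into the central table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users (user_id INTEGER, country TEXT, age INTEGER)"
    )
    conn.executemany("INSERT INTO users VALUES (?, ?, ?)", records)
    conn.commit()

def run_pipeline(text, conn):
    """Chain the four stages so the whole flow runs without manual steps."""
    load(transform(clean(ingest(text))), conn)

conn = sqlite3.connect(":memory:")  # stand-in for the central repository
run_pipeline(RAW_CSV, conn)
print(conn.execute("SELECT * FROM users ORDER BY user_id").fetchall())
# prints [(1, 'US', 34), (3, 'US', 29)]
```

Keeping each stage as its own small function makes it easier to test stages in isolation and to swap one out later, for example replacing `load` with a writer for your actual warehouse.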

Examples:

AWS Lake Formation: Simplifies setting up a secure data lake on AWS.

Google Cloud Dataprep: A visual tool for exploring, cleaning, and preparing data for analysis and machine learning.

Azure Synapse Analytics: An integrated platform for data warehousing, big data analytics, and machine learning.

Common Mistakes to Avoid:

Ignoring Data Governance: Failing to establish clear data ownership, access controls, and quality standards.

Overlooking Data Security: Neglecting to implement proper security measures to protect sensitive data.

Lack of Metadata Management: Not tagging or documenting datasets properly, making them difficult to understand and use.
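One way to guard against the metadata mistake is to refuse to register a dataset unless it is documented. The sketch below is a toy in-memory catalog, not the API of any real catalog product; `DatasetEntry`, `DataCatalog`, the dataset names, and the storage paths are all hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class DatasetEntry:
    name: str
    owner: str
    description: str
    location: str
    tags: set = field(default_factory=set)

class DataCatalog:
    """Toy catalog that only accepts documented datasets."""

    def __init__(self):
        self._entries = {}

    def register(self, entry):
        # Enforce the minimum metadata needed to understand a dataset.
        if not entry.owner or not entry.description:
            raise ValueError(f"{entry.name}: owner and description are required")
        self._entries[entry.name] = entry

    def search(self, tag):
        """Return the names of all datasets carrying the given tag."""
        return sorted(e.name for e in self._entries.values() if tag in e.tags)

catalog = DataCatalog()
catalog.register(DatasetEntry(
    name="orders_2024",
    owner="sales-team",
    description="Daily order exports",
    location="s3://example-bucket/orders/",  # made-up path
    tags={"sales", "pii"},
))
catalog.register(DatasetEntry(
    name="web_clicks",
    owner="marketing-team",
    description="Clickstream events from the website",
    location="s3://example-bucket/clicks/",  # made-up path
    tags={"marketing"},
))
print(catalog.search("pii"))  # prints ['orders_2024']
```

Tags such as `pii` also give access controls and governance rules something to key off, which ties back to the governance and security points above.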

Things to Watch Out For:

Data Quality: Ensure data accuracy, completeness, and consistency through automated validation checks.

Scalability: Choose a data storage and management solution that can scale with your growing data needs.

Integration: Ensure your data platform integrates seamlessly with your other AI development tools.
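The data quality point can be sketched as a small validation function that runs before a batch is accepted. This is an illustrative example only: the `validate` helper, the column names, and the age range are assumptions, and a production setup would more likely rely on a dedicated validation tool.

```python
def validate(rows, required, ranges):
    """Return a list of problems; an empty list means the batch passed."""
    problems = []
    seen = set()
    for i, row in enumerate(rows):
        # Completeness: required columns must be present and non-empty.
        for col in required:
            if row.get(col) in (None, ""):
                problems.append(f"row {i}: missing {col}")
        # Accuracy: numeric values must fall inside the expected range.
        for col, (lo, hi) in ranges.items():
            value = row.get(col)
            if isinstance(value, (int, float)) and not lo <= value <= hi:
                problems.append(f"row {i}: {col}={value} outside [{lo}, {hi}]")
        # Consistency: the same record should not appear twice.
        key = tuple(sorted(row.items()))
        if key in seen:
            problems.append(f"row {i}: duplicate row")
        seen.add(key)
    return problems

# Made-up batch with one problem of each kind.
batch = [
    {"user_id": 1, "age": 34},
    {"user_id": 2, "age": None},
    {"user_id": 3, "age": 212},
    {"user_id": 1, "age": 34},
]
for problem in validate(batch, required=["user_id", "age"], ranges={"age": (0, 120)}):
    print(problem)
```

Returning a list of problems instead of raising on the first one lets the pipeline report everything wrong with a batch in a single pass.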

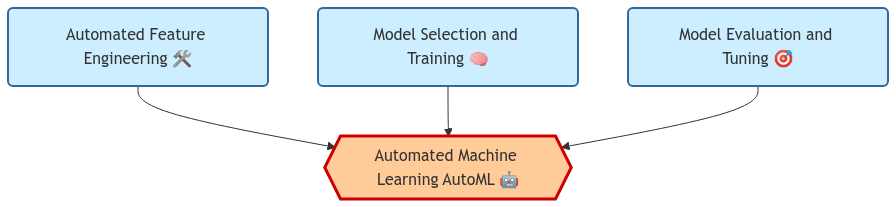

2. Automated Machine Learning (AutoML): Your AI Assistant 🤖

AutoML is like having a tireless AI assistant that handles many of the tedious and time-consuming tasks in the machine learning pipeline.

How it Works:

Automated Feature Engineering: AutoML tools automatically generate and select relevant features from your data.

Model Selection and Training: These tools automatically try different algorithms and hyperparameters, finding the best-performing model.

Model Evaluation and Tuning: AutoML platforms evaluate models using appropriate metrics and automatically fine-tune them for optimal performance.
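To give a feel for the model selection and evaluation steps above, here is a heavily simplified sketch of the search loop: try several candidates, score each with cross-validation, keep the best. It is not how any particular AutoML product works internally. It uses a toy k-nearest-neighbors classifier written in plain Python, synthetic data, and hypothetical names such as `auto_select`, and it skips feature engineering entirely.

```python
import random
from collections import Counter

def knn_predict(train, point, k):
    """Majority label among the k training points closest to `point`."""
    nearest = sorted(
        train,
        key=lambda item: sum((a - b) ** 2 for a, b in zip(item[0], point)),
    )[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def majority_predict(train, point, k=None):
    """Baseline: always predict the most common training label."""
    return Counter(label for _, label in train).most_common(1)[0][0]

def cross_val_accuracy(data, predict, k, folds=5):
    """Mean accuracy over `folds` held-out splits."""
    scores = []
    for f in range(folds):
        test = data[f::folds]
        train = [item for i, item in enumerate(data) if i % folds != f]
        correct = sum(predict(train, x, k) == y for x, y in test)
        scores.append(correct / len(test))
    return sum(scores) / folds

def auto_select(data, candidates, folds=5):
    """Score every candidate and return (best_name, all_scores)."""
    scores = {
        name: cross_val_accuracy(data, fn, k, folds)
        for name, (fn, k) in candidates.items()
    }
    return max(scores, key=scores.get), scores

# Synthetic data: two well-separated clusters, labelled 0 and 1.
rng = random.Random(0)
data = [((rng.gauss(0, 1), rng.gauss(0, 1)), 0) for _ in range(50)]
data += [((rng.gauss(3, 1), rng.gauss(3, 1)), 1) for _ in range(50)]
rng.shuffle(data)

candidates = {
    "baseline": (majority_predict, None),
    "knn_k1": (knn_predict, 1),
    "knn_k3": (knn_predict, 3),
    "knn_k5": (knn_predict, 5),
}
best, scores = auto_select(data, candidates)
print("best candidate:", best)
for name, score in scores.items():
    print(f"{name}: {score:.2f}")
```

Including a trivial baseline among the candidates is a cheap sanity check: if the "best" model barely beats it, that is a signal to look at the data before trusting the result.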

Examples:

Google Cloud AutoML (now offered through Vertex AI): Lets you train custom machine learning models with minimal coding.

H2O (by H2O.ai): An open-source platform for distributed machine learning, including AutoML capabilities.

DataRobot: An enterprise AI platform that automates the end-to-end machine learning process.

Common Mistakes to Avoid:

Blindly Trusting AutoML: While AutoML is powerful, it's not a magic bullet. Always validate the results and understand the underlying models.

Ignoring Domain Expertise: AutoML should complement, not replace, your domain knowledge and expertise.

Overfitting: Ensure your AutoML solution has built-in mechanisms to prevent overfitting, like cross-validation and regularization.

Things to Watch Out For:

Explainability: Choose AutoML tools that provide insights into how models were built and how they make predictions.

Customization: Ensure you can customize the AutoML process to fit your specific needs and constraints.

Cost: AutoML services can be expensive, especially for large datasets and complex models.

3. Collaborative AI Platforms: Breaking Down Silos 🤝

AI development is a team sport. 🏈 Collaborative platforms bring teams together, fostering communication, knowledge sharing, and efficient workflows.

How it Works:

Shared Workspaces: Provide a central hub where teams can collaborate on projects, share code, data, and models.

Version Control: Enable teams to track changes, manage different versions of code and models, and easily revert to previous versions.

Experiment Tracking: Allow teams to log experiments, compare results, and reproduce successful outcomes.
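Experiment tracking boils down to recording what you ran and what came out, in a place the whole team can read. The sketch below shows that core idea with a JSON Lines file and the standard library. It is not the API of MLflow, Comet ML, or Weights & Biases; the `ExperimentLog` class, the run names, and the metric values are all made up for illustration.

```python
import json
import tempfile
import time
from pathlib import Path

class ExperimentLog:
    """Append-only JSON Lines log of experiment runs."""

    def __init__(self, path):
        self.path = Path(path)

    def log_run(self, name, params, metrics):
        """Record one run with its parameters and resulting metrics."""
        record = {"name": name, "time": time.time(),
                  "params": params, "metrics": metrics}
        with self.path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(record) + "\n")

    def runs(self):
        """Load every logged run, oldest first."""
        if not self.path.exists():
            return []
        with self.path.open(encoding="utf-8") as f:
            return [json.loads(line) for line in f if line.strip()]

    def best(self, metric, higher_is_better=True):
        """Return the run with the best value for `metric`, or None."""
        runs = [r for r in self.runs() if metric in r["metrics"]]
        if not runs:
            return None
        pick = max if higher_is_better else min
        return pick(runs, key=lambda r: r["metrics"][metric])

# Illustrative runs; the accuracy numbers are invented, not real results.
log = ExperimentLog(Path(tempfile.mkdtemp()) / "runs.jsonl")
log.log_run("baseline", {"lr": 0.1, "depth": 3}, {"accuracy": 0.81})
log.log_run("deeper", {"lr": 0.1, "depth": 6}, {"accuracy": 0.86})
log.log_run("lower-lr", {"lr": 0.01, "depth": 6}, {"accuracy": 0.84})

winner = log.best("accuracy")
print(winner["name"], winner["params"])  # prints deeper {'lr': 0.1, 'depth': 6}
```

Because the winning run carries its parameters, anyone on the team can rerun it. Dedicated platforms add the parts this sketch leaves out, such as artifact storage, dashboards, and access control.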

Examples:

MLflow: An open-source platform for managing the end-to-end machine learning lifecycle, including experiment tracking and model management.

Comet ML: A platform for tracking, comparing, explaining, and optimizing machine learning experiments and models.

Weights & Biases: A developer tool for machine learning that provides experiment tracking, model visualization, and collaboration features.

Common Mistakes to Avoid:

Lack of Standardization: Failing to establish clear guidelines for code style, documentation, and experiment tracking.

Poor Communication: Not using the platform's communication features effectively, leading to misunderstandings and delays.

Ignoring Security: Neglecting to implement proper access controls and security measures to protect sensitive data and intellectual property.

Things to Watch Out For:

Integration: Choose a platform that integrates with your existing tools and workflows.

User Adoption: Choose a user-friendly platform, and provide adequate training and support to encourage adoption.

Scalability: Select a platform that can scale with your team's growing needs.

Tips:

DevOps Principles: Apply DevOps practices like continuous integration, continuous delivery, and continuous monitoring to your AI development process.

Focus on Reproducibility: Ensure your experiments are reproducible by carefully tracking code, data, dependencies, and environment configurations.

Start with a Strong Foundation: Invest time in building a robust data infrastructure and a well-defined AI development process before scaling up.

Iterate and Improve: Continuously evaluate your workflow, identify areas for improvement, and iterate on your processes and tools.

Prioritize Automation: Automate as many tasks as possible, from data ingestion and preprocessing to model training and deployment. Data cleaning and model tuning are good places to start, since automating them can significantly reduce development time.

Leverage Cloud Resources: Cloud platforms offer a wealth of services for AI development, including scalable compute, storage, and pre-trained models.

Stay Up-to-Date: The field of AI is constantly evolving. Keep learning about new tools, techniques, and best practices to stay ahead of the curve.
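As a small illustration of the reproducibility tip above, the sketch below captures a snapshot of the inputs that determine a run: a hash of the data, the parameters, the random seed, and the Python and platform versions. The `snapshot` and `run_experiment` helpers are hypothetical, and the "experiment" is just a seeded random draw standing in for real training. Dependencies are not covered here; a lockfile or the output of `pip freeze` would be one way to record them.

```python
import hashlib
import json
import platform
import random
import sys

def fingerprint(data: bytes) -> str:
    """Content hash, so any change to the data is detectable."""
    return hashlib.sha256(data).hexdigest()

def snapshot(data: bytes, params: dict, seed: int) -> dict:
    """Collect what is needed to repeat a run later."""
    return {
        "data_sha256": fingerprint(data),
        "params": params,
        "seed": seed,
        "python": sys.version.split()[0],
        "platform": platform.platform(),
    }

def run_experiment(seed: int) -> list:
    """Stand-in for training: all randomness comes from one seeded generator."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(3)]

data = b"user_id,age\n1,34\n3,29\n"  # made-up dataset contents
record = snapshot(data, {"lr": 0.1}, seed=42)
print(json.dumps(record, indent=2))

# Same seed, same result: the run can be repeated exactly.
print(run_experiment(42) == run_experiment(42))  # prints True
```

Storing this record next to each experiment's results means a surprising metric can be traced back to a changed dataset, a different seed, or a different environment.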

Let's build the future of AI! Efficiently. 🚀