Learn How to Prevent Overfitting in Deep Neural Networks

Deep learning models that work great in training but not in real life? The problem is overfitting. Learn how to build models that generalize like a pro!

Let's be honest, building deep neural networks is a fun but complex task!

You strive to create a model that can comprehend the complexities of your data, but if you push it too far, you risk overfitting.

Your model becomes a memorization whiz, acing the training data but failing miserably on anything new.

Let us look at some useful ways to avoid overfitting and make deep learning models that are great at generalization.

No more training data stars who do not work out in real life!

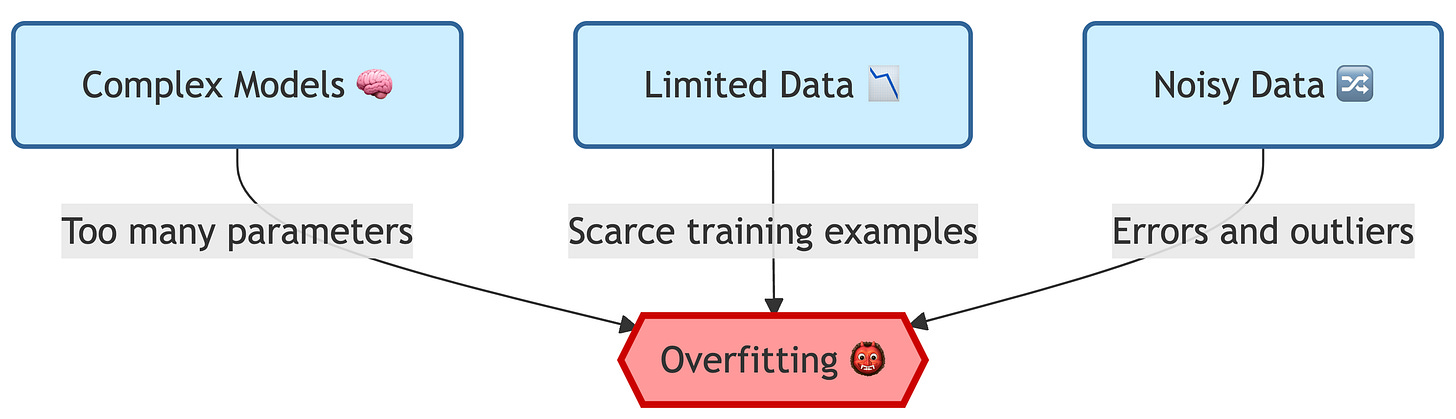

👉 Understand the Overfitting Risks

Overfitting happens when your model learns the training data too well, including its noise and quirks. It's like a student who memorizes the textbook verbatim but can't apply the concepts to new problems.

Common Reasons:

Complex Models: Deep networks with tons of parameters have a high capacity to memorize.

Limited Data: When your training data is scarce, the model might latch onto spurious patterns.

Noisy Data: Errors and outliers in your data can mislead the model.

Spotting the Signs:

Stellar Training Performance, Poor Validation / Test Performance: The classic red flag. 🚩 Your model nails the training set but bombs on unseen data.

High Variance: The model's performance fluctuates wildly between different datasets.

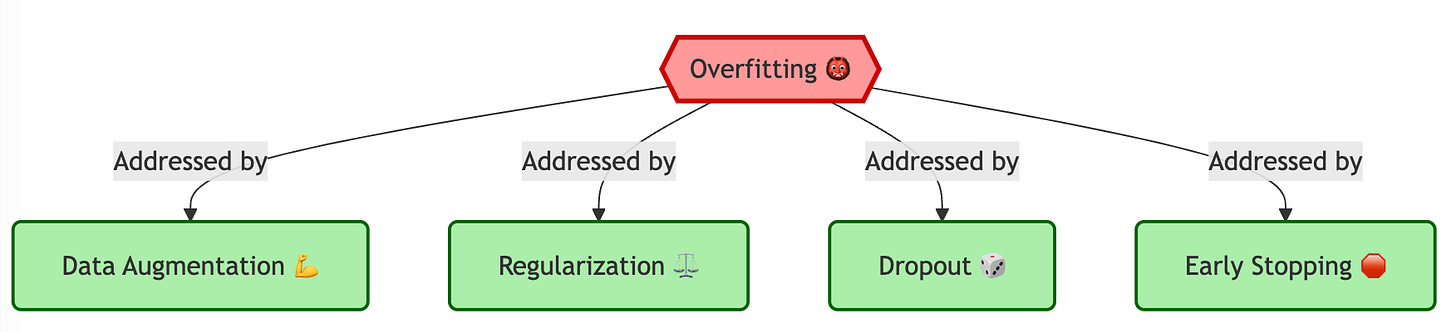

Taming the Beast: Techniques to Prevent Overfitting

Now that we know our enemy, let's arm ourselves with the right tools. Here's your arsenal to combat overfitting:

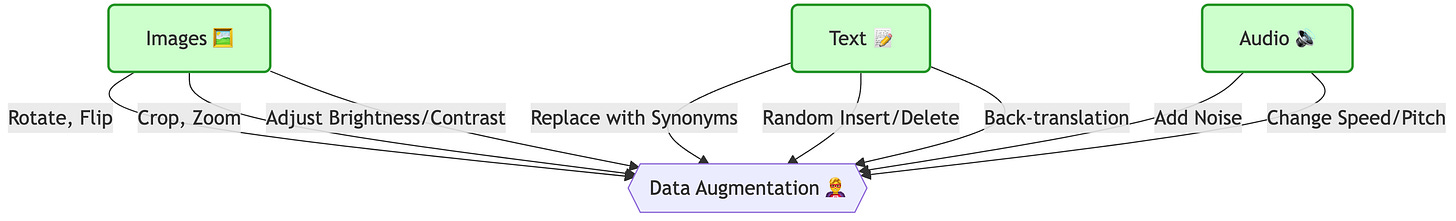

1. Data Augmentation: More Data, More Power 💪

Think of data augmentation as giving your dataset a superhero boost. You're artificially expanding your training data by creating modified versions of existing examples.

How it Works:

Images: Rotate, flip, crop, zoom, adjust brightness/contrast.

Text: Replace words with synonyms, randomly insert/delete words, back-translation.

Audio: Add noise, change speed/pitch.

Example:

Imagine training an image classifier to recognize cats. 🐱 Instead of just feeding it original cat images, you create variations: a rotated cat, a zoomed-in cat, a cat with slightly altered colors. This forces the model to learn the essential features of a cat, not just the specifics of individual images.

Benefits:

Increases Dataset Size: Fights overfitting caused by limited data.

Improves Generalization: The model learns to recognize patterns across variations.

Mistakes to Avoid:

Overdoing It: Too much augmentation can introduce unrealistic data and harm performance. Apply transformations that preserve the data's inherent meaning.

Ignoring Domain Knowledge: Choose augmentation techniques relevant to your data and problem.

2. Regularization: Penalizing Complexity

Regularization is like adding a wise mentor to your model's training process. It discourages the model from becoming too complex and overfitting by adding a penalty to the loss function.

Types of Regularization:

L1 Regularization (Lasso): Adds the absolute value of weights to the loss. Encourages sparsity (some weights become zero), effectively performing feature selection.

L2 Regularization (Ridge): Adds the squared value of weights to the loss. Shrinks weights towards zero, reducing the model's sensitivity to individual data points.

Elastic Net: A combination of L1 and L2, balancing sparsity and weight shrinkage.

Example:

Imagine you're building a model to predict housing prices. L1 regularization might eliminate features that are irrelevant to price prediction (e.g., the number of squirrels in the neighborhood). L2 regularization would reduce the influence of features that are only weakly correlated with price.

Benefits:

Reduces Overfitting: Penalizes large weights, preventing the model from relying too heavily on specific features.

Improves Generalization: Encourages the model to learn simpler, more robust patterns.

Common Wrong Beliefs:

"Regularization always improves performance": Not necessarily. Over-regularization can lead to underfitting, where the model is too simple to capture important patterns.

"L1 is always better than L2": The best choice depends on your specific problem and data.

3. Dropout: Introducing Randomness 🎲

Dropout is like forcing your model to study with random distractions. During training, it randomly "drops out" (deactivates) a fraction of neurons in each layer.

How it Works:

In each training iteration, a different set of neurons is deactivated.

The remaining neurons must pick up the slack, preventing over-reliance on any single neuron.

Example:

Imagine a team working on a project. If one member is randomly absent each day, others must learn to handle their responsibilities. This makes the team more robust and less dependent on any individual.

Benefits:

Reduces Overfitting: Prevents co-adaptation of neurons, forcing them to learn more independent features.

Acts as Ensemble Learning: Each training iteration with dropout is like training a slightly different model. The final prediction is an average over these "mini-models."

Things to Watch Out For:

Dropout Rate: The probability of dropping a neuron (e.g., 0.5 means 50% chance). Too high, and the model might underfit; too low, and the effect is minimal.

Inference Time: Remember to turn off dropout during testing or prediction! ⚠️

4. Early Stopping: Knowing When to Quit 🛑

Early stopping monitors the model's performance on a validation set and stops training when the performance starts to degrade.

How it Works:

Train your model while periodically evaluating its performance on a separate validation set.

Stop training when the validation performance stops improving or starts worsening.

Example:

Imagine you're training for a marathon. You wouldn't keep pushing yourself to exhaustion every day. Instead, you'd track your progress and stop when you're no longer improving or risk injury.

Benefits:

Prevents Overfitting: Stops training before the model starts memorizing the training data.

Saves Time and Resources: Avoids unnecessary training iterations.

Potential Pitfalls:

Patience Parameter: How many epochs to wait for improvement before stopping. Setting it too low might stop training prematurely.

Validation Set Size: A small validation set can lead to noisy performance estimates, making it hard to determine the optimal stopping point.

👉 Advices and Tips: Fine-Tuning Your Approach

These are just some key strategies. To really master overfitting prevention, keep these tips in mind:

Start Simple: Begin with a smaller model and gradually increase complexity if needed.

Cross-Validation: Use techniques like k-fold cross-validation to get a more robust estimate of your model's generalization performance.

Hyperparameter Tuning: Experiment with different regularization strengths, dropout rates, and other hyperparameters to find the optimal settings for your specific problem.

Monitor Training and Validation Curves: Keep a close eye on the loss and accuracy curves during training. They can provide valuable insights into whether your model is overfitting or underfitting.

Use a Larger Dataset if possible: This should be your number one priority, if you have resources for that.

By understanding the causes of overfitting and implementing these techniques, you'll be well-equipped to build deep learning models that are not just accurate on paper but also reliable in the real world. Go forth and conquer overfitting! 🎉

Questions Deepdive:

1️⃣ How can we quantify the trade-off between model complexity and generalization performance when choosing regularization techniques?

The trade-off can be visualized using a validation curve, plotting model performance against varying levels of regularization strength.

Techniques like cross-validation help to evaluate how different levels of complexity (e.g., number of parameters, regularization penalties) impact generalization.

Information criteria like AIC or BIC can also provide a quantitative measure, balancing model fit with complexity.

2️⃣ Beyond standard techniques, what are some unconventional approaches to preventing overfitting that are gaining traction in research?

Adversarial training, where the model is trained on intentionally perturbed data, is one such technique gaining interest.

Bayesian deep learning, which incorporates uncertainty into model parameters, offers a probabilistic approach to mitigating overfitting.

Using knowledge distillation, where a smaller model is trained to mimic a larger, more complex model, can also enhance generalization.

3️⃣ In scenarios with extremely limited data, how can we effectively leverage transfer learning to combat overfitting?

Transfer learning allows us to fine-tune a model pre-trained on a large dataset for a related task, requiring significantly less data for the new task.

By freezing certain layers of the pre-trained model and only training others, we can prevent overfitting on the limited new dataset.

Selecting a pre-trained model whose original task is closely related to the target task is crucial for effective transfer learning.

4️⃣ What are the implications of different types of noise in the training data for overfitting, and how can we tailor our strategies accordingly?

Systematic noise, which consistently skews the data, can lead the model to learn incorrect patterns, while random noise might be averaged out.

Label noise, where the training labels themselves are incorrect, poses a significant challenge and requires specialized techniques like label smoothing.

Tailoring data cleaning and preprocessing steps to the specific type of noise present can significantly improve model robustness.

5️⃣ How does the choice of optimization algorithm interact with the risk of overfitting, and what are some best practices in this regard?

Optimizers like Adam or RMSprop, with adaptive learning rates, might converge faster but can also overfit more readily if not carefully controlled.

Using a learning rate schedule, where the learning rate decreases over time, can help to mitigate this risk.

Techniques like early stopping become even more crucial when using adaptive optimizers to prevent overfitting late in training.

6️⃣ Can we design a feedback mechanism within the training process that dynamically adjusts regularization based on real-time overfitting indicators?

Such a mechanism could monitor metrics like the validation loss and adjust the strength of dropout or L2 regularization accordingly.

Implementing this would require defining clear thresholds and rules for when to increase or decrease regularization.

This could potentially lead to more efficient training and better generalization by automatically finding the optimal regularization balance.

7️⃣ How can concepts from information theory, such as Minimum Description Length (MDL), inform our strategies for preventing overfitting?

MDL suggests that the best model is the one that can compress the data most efficiently, balancing model complexity with goodness of fit.

Regularization can be viewed as a way of implementing the MDL principle, penalizing overly complex models.

This perspective provides a theoretical justification for using techniques that favor simpler models with good generalization ability.