Here's Why Edge AI Beats Cloud AI for Latency-Sensitive Applications

Struggling with AI latency? Cloud's great, but Edge AI slashes delays by processing data closer to the source! Ideal for real-time apps like autonomous vehicles and AR/VR.

Hey there, AI product leaders and tech decision-makers! 👋

Are you deciding where to deploy your AI workloads?

Cloud has been the go-to for a while, but there's a new player gaining serious traction – Edge AI.

For applications where every millisecond counts, the difference between cloud and edge can make or break your product's success.

Let's talk about why Edge AI is leaving Cloud AI in the dust when it comes to latency-sensitive applications. 🏎️💨

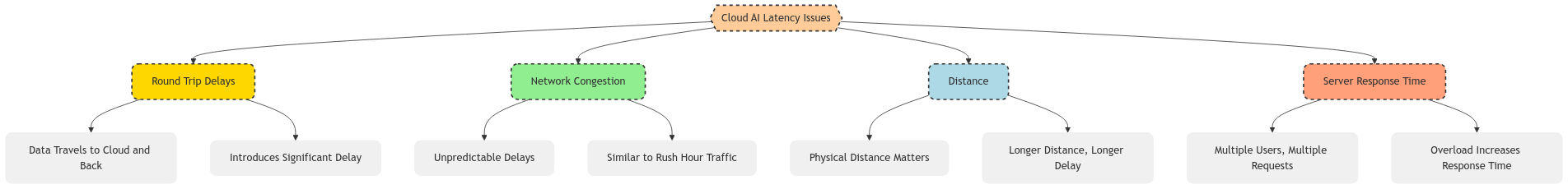

The Cloud's Issue: Latency ☁️

The cloud has revolutionized many aspects of computing, and AI is no exception.

Cloud platforms offer scalability, vast computational resources, and a plethora of pre-built AI services.

But the cloud has one big issue: latency.

➡️ The Problem with Round Trips:

When you rely on the cloud for AI processing, data needs to travel back and forth between the device and the cloud server.

This round trip introduces latency – a delay that can be detrimental to applications requiring real-time or near-real-time responses.

Think about it: even with a fast internet connection, sending data to a distant server, processing it, and receiving the results takes time.

And that's the ideal, best-case scenario.

➡️ Factors Exacerbating Cloud Latency:

Network Congestion: Just like rush hour traffic, networks get congested at busy times, leading to unpredictable delays.

Distance: The physical distance between the device and the cloud server plays a significant role. The farther the data has to travel, the longer it takes.

Server Response Time: Cloud servers handle requests from multiple users simultaneously. If the server is overloaded, response times can increase, adding to the overall latency.
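To see how these factors add up, here is a back-of-the-envelope sketch in Python. Every number in it is an illustrative assumption, not a measurement: light in optical fiber covers roughly 200 km per millisecond, and the congestion, queueing, and inference figures are placeholders to swap for your own.

```python
# Rough round-trip latency estimate. All inputs are illustrative assumptions.
FIBER_KM_PER_MS = 200.0  # light in optical fiber covers roughly 200 km per ms


def round_trip_ms(distance_km, congestion_ms, server_queue_ms, inference_ms):
    """Estimate end-to-end latency for one inference request."""
    propagation_ms = 2 * distance_km / FIBER_KM_PER_MS  # there and back
    return propagation_ms + congestion_ms + server_queue_ms + inference_ms


# Hypothetical cloud region 1,500 km away vs. a model running on the device.
# The edge device is assumed to be slower at inference (30 ms vs. 15 ms).
cloud_ms = round_trip_ms(distance_km=1500, congestion_ms=20,
                         server_queue_ms=10, inference_ms=15)
edge_ms = round_trip_ms(distance_km=0, congestion_ms=0,
                        server_queue_ms=0, inference_ms=30)

print(f"cloud: {cloud_ms:.0f} ms, edge: {edge_ms:.0f} ms")
# prints "cloud: 60 ms, edge: 30 ms"
```

Even with slower hardware assumed at the edge, the local path comes out ahead here because it skips the network entirely. Real deployments will differ, so measure before you decide.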

➡️ Real-World Examples of Cloud Latency Pain Points:

Autonomous Vehicles: Imagine a self-driving car relying solely on cloud-based AI for object detection and decision-making. A delay of even a fraction of a second in processing sensor data could lead to an accident, because a car at highway speed covers several meters in that time.

Real-Time Video Analytics: In security systems using AI-powered video analytics, a lag in identifying suspicious activity could render the system ineffective.

Industrial Automation: In a factory setting, robots performing precision tasks need to react instantly to changes in their environment. Cloud latency could disrupt the production process and even cause safety hazards.

AR/VR Applications: Augmented and virtual reality applications demand extremely low latency to create a seamless and immersive user experience. Even slight delays can cause motion sickness and break the illusion.
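For the autonomous vehicle example, a quick Python sketch shows what a delay means in physical terms. The 108 km/h speed and the latency values are assumptions picked for easy arithmetic, not figures from any real vehicle.

```python
def distance_travelled_m(speed_kmh, latency_ms):
    """Metres a vehicle covers while waiting latency_ms for a decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000


# 108 km/h is exactly 30 m/s, which keeps the numbers easy to check by hand.
for latency_ms in (10, 50, 100, 250):
    metres = distance_travelled_m(speed_kmh=108, latency_ms=latency_ms)
    print(f"{latency_ms:>3} ms -> {metres:.1f} m")
```

At 30 m/s, every 100 ms of waiting is about 3 meters of road covered before the car can react.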

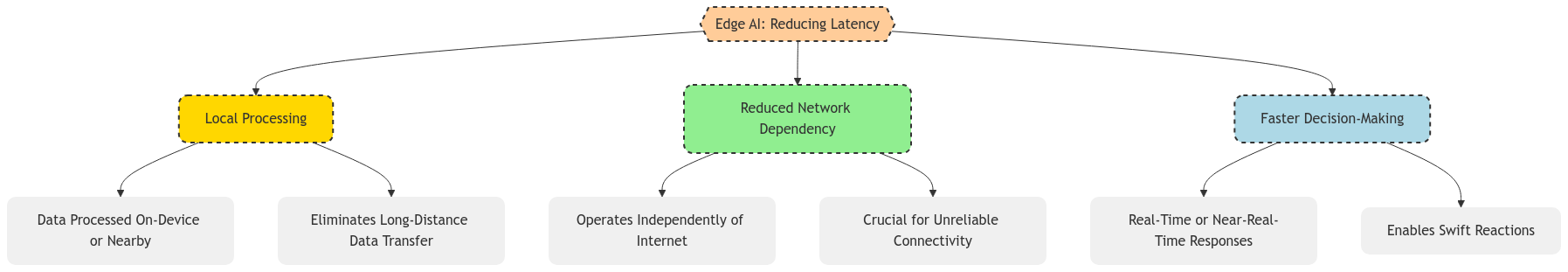

Edge AI: Bringing the Brain Closer to the Action 🧠

Edge AI flips the script by bringing AI processing closer to the data source – the "edge" of the network, where devices reside.

This fundamental shift has profound implications for latency-sensitive applications.

➡️ How Edge AI Slashes Latency:

Local Processing: Instead of sending data to the cloud, Edge AI processes data directly on the device or on a nearby gateway. This eliminates the need for long-distance data transfers, dramatically reducing latency.

Reduced Network Dependency: Edge AI can operate without a constant internet connection. This is crucial where connectivity is unreliable or limited, such as at remote sites or on devices that are on the move.

Faster Decision-Making: With processing happening locally, decisions can be made in real-time or near-real-time, enabling swift responses to changing conditions.

➡️ Edge AI in Action: Real-World Use Cases:

Smart Manufacturing: Edge AI powers robots that can inspect products for defects in real-time, identify anomalies in the production process, and adjust their actions accordingly, all without relying on a cloud connection.

Predictive Maintenance: Sensors embedded in machinery collect data that is analyzed by Edge AI algorithms to predict equipment failures before they occur, minimizing downtime and optimizing maintenance schedules.

Smart Homes: Edge AI enables smart home devices to respond instantly to user commands, control lighting and temperature based on real-time conditions, and enhance security through local facial recognition, all without sending sensitive data to the cloud.

Retail Analytics: Edge AI-powered cameras in retail stores can analyze customer behavior, track inventory levels, and personalize promotions in real-time, enhancing the shopping experience and driving sales.

➡️ Common Misconceptions About Edge AI:

Edge AI is a replacement for Cloud AI: Not necessarily. Edge AI and Cloud AI can work together in a hybrid model, with the edge handling latency-sensitive tasks and the cloud providing centralized management, model training, and large-scale data analysis.

Edge AI is only for simple tasks: Advancements in edge hardware and algorithms are enabling increasingly complex AI workloads to be processed at the edge, including deep learning and computer vision.

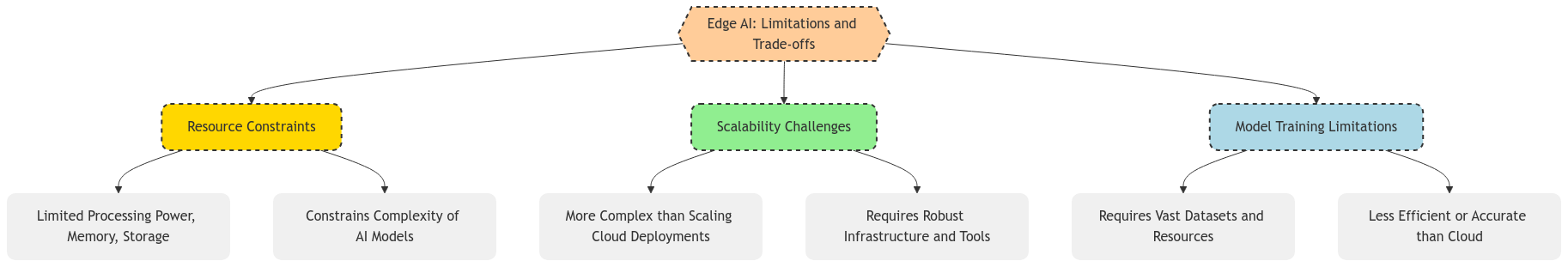

The Trade-Offs: When is Edge AI Not the Right Choice? 🤔

While Edge AI offers significant advantages for latency-sensitive applications, it's not a one-size-fits-all solution. There are trade-offs to consider.

➡️ Resource Constraints:

Edge devices typically have limited processing power, memory, and storage compared to cloud servers.

This can constrain the complexity of AI models that can be deployed at the edge.

Running large deep learning models on these devices often means shrinking them first, for example through quantization or pruning.
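A rough way to sanity-check whether a model fits on a device is to estimate the size of its weights at different numeric precisions. The sketch below is Python with made-up numbers: the 25-million-parameter model and the 64 MB budget are hypothetical, and the estimate covers weights only, not activations or runtime overhead.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weights_mb(num_params, dtype):
    """Approximate size of the model weights alone, in MB (10**6 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


def fits(num_params, dtype, budget_mb):
    """True if the weights fit within the given memory budget."""
    return weights_mb(num_params, dtype) <= budget_mb


# Hypothetical 25-million-parameter vision model, 64 MB available on the device.
for dtype in BYTES_PER_PARAM:
    size = weights_mb(25_000_000, dtype)
    print(f"{dtype}: {size:.0f} MB, fits in 64 MB: {fits(25_000_000, dtype, 64)}")
```

In this example the float32 weights (100 MB) do not fit, while the float16 (50 MB) and int8 (25 MB) versions do. Lower precision can cost some accuracy, so treat this as a first filter rather than a final answer.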

➡️ Scalability Challenges:

Scaling Edge AI deployments can be more complex than scaling cloud deployments.

Managing and updating a large number of distributed edge devices requires robust infrastructure and tools.

➡️ Model Training Limitations:

Training complex AI models often requires vast datasets and computational resources that are typically found in the cloud.

While some model training can be done at the edge (e.g., federated learning), it may be less efficient or less accurate than cloud-based training.
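To give a feel for how federated learning combines work from many devices, here is a minimal Python sketch of the aggregation step used in federated averaging (FedAvg): the server averages the weights reported by each device, weighted by how many samples that device trained on. Local training is left out, and the three devices and their numbers are hypothetical.

```python
def federated_average(client_updates):
    """Weighted average of client weight vectors (FedAvg aggregation step).

    client_updates: list of (weights, num_samples) pairs, where weights is a
    list of floats with the same length for every client.
    """
    total_samples = sum(n for _, n in client_updates)
    num_weights = len(client_updates[0][0])
    averaged = [0.0] * num_weights
    for weights, n in client_updates:
        for i, w in enumerate(weights):
            # Clients with more training samples pull the average harder.
            averaged[i] += w * n / total_samples
    return averaged


# Three hypothetical devices report locally trained weights and sample counts.
updates = [
    ([1.0, 2.0], 100),
    ([3.0, 4.0], 300),
    ([5.0, 6.0], 100),
]
global_weights = federated_average(updates)
print(global_weights)
```

Only the weights and sample counts leave each device, never the raw data. The result lands close to the second device's weights because that device contributed the most samples.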

➡️ Examples Where Cloud AI Might Still Be Preferred:

Large-Scale Data Analytics: Analyzing massive datasets to identify trends, patterns, and insights often requires the computational power of the cloud.

Complex Model Training: Training sophisticated deep learning models with hundreds of millions or billions of parameters typically demands the resources of a cloud environment.

Applications Requiring Centralized Data Aggregation: If your application needs to aggregate and analyze data from multiple sources in a central location, the cloud may be a better choice.

Looking Forward: The Future is at the Edge (and in the Cloud)

The future of AI is not about choosing between edge and cloud.

It's about leveraging the strengths of both in a complementary way.

We're moving towards a hybrid model where the edge and cloud work together seamlessly, each handling the tasks it's best suited for.
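One way to picture that split is a small routing rule that decides, per task, where the work should run. The Python sketch below is only an illustration: the `Task` fields, the `route` function, and the 60 ms round-trip figure are all hypothetical, and a real system would weigh cost, privacy, and model quality too.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    latency_budget_ms: float  # how long the caller can wait for a result
    needs_fleet_data: bool    # True if the task needs data from many devices


def route(task, cloud_round_trip_ms, cloud_reachable=True):
    """Pick where a task runs: 'edge', 'cloud', or 'defer' (wait for the cloud)."""
    if task.needs_fleet_data:
        # Aggregation across devices can only happen centrally.
        return "cloud" if cloud_reachable else "defer"
    if not cloud_reachable or task.latency_budget_ms < cloud_round_trip_ms:
        # No connection, or the round trip alone would blow the budget.
        return "edge"
    return "cloud"


tasks = [
    Task("obstacle detection", latency_budget_ms=20, needs_fleet_data=False),
    Task("voice command", latency_budget_ms=300, needs_fleet_data=False),
    Task("weekly model retraining", latency_budget_ms=3_600_000, needs_fleet_data=True),
]
for task in tasks:
    print(f"{task.name}: {route(task, cloud_round_trip_ms=60)}")
```

With an assumed 60 ms round trip, obstacle detection stays on the edge, while the voice command and retraining go to the cloud. If the connection drops, latency-sensitive tasks fall back to the edge and fleet-wide jobs wait.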

➡️ Predictions and Considerations:

Rise of Hybrid Edge-Cloud Architectures: We'll see more sophisticated architectures that seamlessly integrate edge and cloud processing, enabling dynamic workload allocation based on real-time requirements.

Advancements in Edge Hardware: Expect to see more powerful and energy-efficient edge devices specifically designed for AI workloads, including specialized chips and accelerators.

Federated Learning Gains Traction: Federated learning, a technique that allows training AI models across multiple edge devices without sharing raw data, will become increasingly important for privacy-sensitive applications.

5G and Beyond: The rollout of 5G and future wireless technologies will provide faster and more reliable connectivity, further blurring the lines between edge and cloud.

Edge-as-a-Service (EaaS) Emerges: Just as we have cloud-based services, we can anticipate the emergence of EaaS platforms that simplify the deployment, management, and scaling of Edge AI applications.

The battle between Edge AI and Cloud AI isn't about one winning over the other.

It's about understanding where each one is strongest and using both to build the next generation of intelligent, responsive, and efficient applications. For latency-sensitive use cases, the edge is where the action is. 👾