Here's the Surprising Reason Your MLOps Pipeline is Underperforming

MLOps pipelines often underperform not because of tooling or team size but because of data drift: production data moves away from the data the model was trained on, so the model keeps applying outdated patterns and its results degrade. Here is how to fix it.

Hey there, AI leaders and machine learning maestros! 👋

Are you pouring resources into your MLOps pipeline, yet still not seeing the results you crave?

You've got the latest tools, a team of brilliant engineers, and a clear roadmap, but something's still off.

Many organizations are struggling to realize the full potential of their MLOps investments.

What if the real bottleneck is something fundamental yet often overlooked? 😮

The Silent Killer: Data Drift's Insidious Impact 📉

We often think of MLOps as a purely technical challenge, focusing on automation, infrastructure, and model deployment. But the truth is, your models are only as good as the data they're trained on. And in the real world, data is rarely static. 😬 It evolves, shifts, and drifts over time, often in subtle ways that can go unnoticed until it's too late.

Data drift, the phenomenon where the statistical properties of the data your model sees in production move away from those of the data it was trained on, is the silent killer of MLOps performance. It's like trying to navigate with an outdated map – your model is making decisions based on a reality that no longer exists.

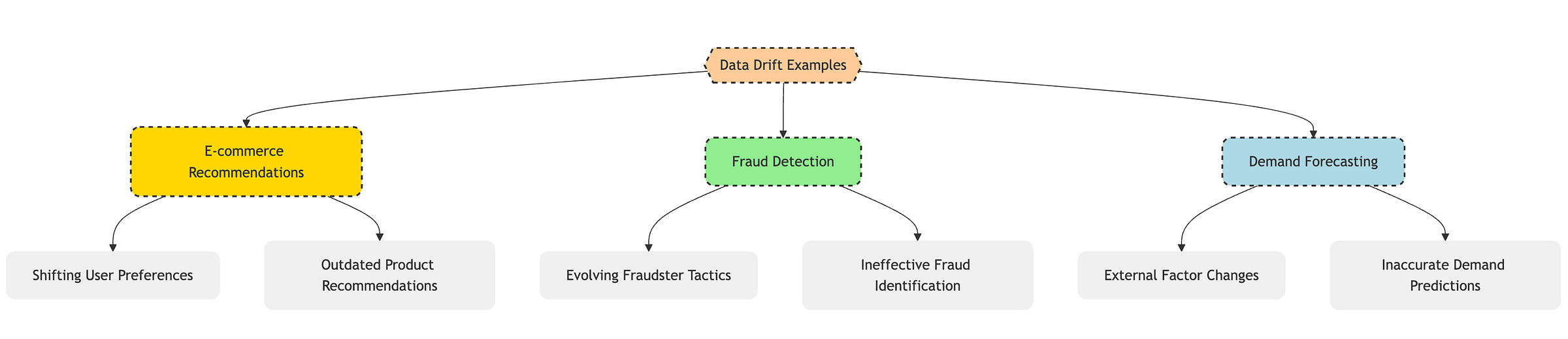
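
To make this concrete, here is a minimal sketch of one common way to put a number on drift: the Population Stability Index (PSI), which compares how a feature is distributed in training data versus recent production data. Everything specific here is an assumption for illustration: the simulated "order value" samples, the bin count, and the thresholds mentioned in the comments (the 0.1 and 0.25 cut-offs are a widely quoted rule of thumb, not a standard).

```python
import math
import random


def population_stability_index(baseline, current, bins=10, eps=1e-6):
    """Population Stability Index between two numeric samples.

    Bin edges come from the baseline's range; eps avoids log(0)
    when a bin is empty in one of the samples.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0

    def bin_fractions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped to the edge bins.
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    expected = bin_fractions(baseline)
    actual = bin_fractions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Simulated feature values (hypothetical): order value in the training data,
# in production with no drift, and in production after the mean has moved.
rng = random.Random(42)
training = [rng.gauss(50, 10) for _ in range(5000)]
no_drift = [rng.gauss(50, 10) for _ in range(5000)]
shifted = [rng.gauss(60, 10) for _ in range(5000)]

psi_stable = population_stability_index(training, no_drift)
psi_drifted = population_stability_index(training, shifted)

# Rule of thumb (assumption): below 0.1 is stable, above 0.25 deserves a look.
print(f"PSI without drift: {psi_stable:.3f}")
print(f"PSI with drift:    {psi_drifted:.3f}")
```

Running a check like this per feature on a schedule gives you a simple early-warning signal long before the drop shows up in business metrics.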

➡️ Examples of Data Drift in Action:

E-commerce recommendation system: Imagine you've built a killer recommendation engine based on user purchase data from last year. But what if user preferences have shifted due to new trends, economic conditions, or even a global pandemic? Your model, still relying on outdated patterns, will start recommending products that users are no longer interested in, leading to decreased engagement and lost sales.

Fraud detection in fintech: Your fraud detection model is trained on historical transaction data. But fraudsters are constantly evolving their tactics. If your model isn't updated to reflect these new patterns, it will become increasingly ineffective at identifying fraudulent activity, leading to financial losses and reputational damage.

Demand forecasting for supply chain: You're using historical sales data to predict future demand. But what if a sudden change in regulations, a new competitor entering the market, or a viral social media trend throws your predictions off? 📈 Your model, unaware of these external factors, will produce inaccurate forecasts, leading to stockouts, overstocking, and supply chain disruptions.

➡️ Common Mistakes That Amplify Data Drift:

"Set it and forget it" mentality: Many teams treat model deployment as a one-time event. They build a model, deploy it, and then move on to the next project, assuming the model will continue to perform well indefinitely. This is a recipe for disaster.

Lack of monitoring and feedback loops: Without proper monitoring in place, data drift can go undetected for months, silently eroding your model's performance. It's crucial to have mechanisms in place that track key data characteristics and model performance metrics, and that trigger alerts when significant deviations are detected.

Ignoring the human element: Data drift isn't just a technical problem; it's also a human one. Changes in business processes, data collection methods, or even user behavior can all contribute to drift. It's essential to involve domain experts and stakeholders in the monitoring process to identify and interpret these changes.
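
The monitoring and feedback loop described above can be sketched in a few lines. This is an illustrative example, not a production monitor: the class name `RollingAccuracyMonitor`, the window size, the baseline, and the tolerance are all assumptions, and it presumes that ground-truth labels eventually arrive for your predictions.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent labelled predictions."""

    def __init__(self, baseline_accuracy, window=100, tolerance=0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # old outcomes fall off automatically

    def record(self, predicted, actual):
        """Store one outcome and return an alert message, or None."""
        self.outcomes.append(predicted == actual)
        if len(self.outcomes) < self.outcomes.maxlen:
            return None  # not enough feedback yet to judge
        accuracy = sum(self.outcomes) / len(self.outcomes)
        if accuracy < self.baseline - self.tolerance:
            return (f"ALERT: rolling accuracy {accuracy:.2f} "
                    f"is below baseline {self.baseline:.2f}")
        return None


# Simulated feedback (hypothetical): the first 100 predictions are 92% correct,
# the next 100 are only 70% correct, with the errors at the end of each batch.
monitor = RollingAccuracyMonitor(baseline_accuracy=0.90, window=100, tolerance=0.05)
alerts = []
for i in range(200):
    correct_per_hundred = 92 if i < 100 else 70
    actual = 1
    predicted = 1 if (i % 100) < correct_per_hundred else 0
    message = monitor.record(predicted, actual)
    if message:
        alerts.append((i, message))

print(f"{len(alerts)} alerts, first at prediction #{alerts[0][0]}")
```

In a real pipeline the alert would go to a pager or a chat channel rather than a list, and that is where the human element comes in: someone with domain knowledge decides whether the drop is drift, a data bug, or a seasonal blip.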

The Feature Store Fallacy: Why Tools Alone Aren't Enough 🛠️

In the quest to improve MLOps, many organizations are turning to feature stores as a silver bullet solution. And don't get me wrong, feature stores are powerful tools. They provide a centralized repository for managing, sharing, and serving features, streamlining the model development process and promoting consistency.

But a feature store alone won't solve your data drift problem. 🚫 It can help you manage features, but it won't automatically detect or mitigate drift.

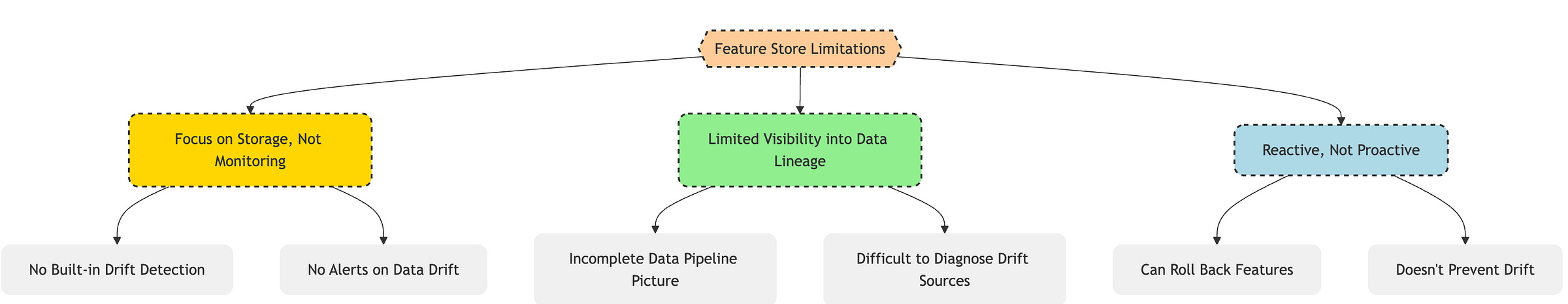

➡️ Why Feature Stores Fall Short on Data Drift:

Focus on storage, not monitoring: Feature stores are primarily designed for storing and serving features, not for monitoring their statistical properties over time. Many offer little or no built-in support for detecting and alerting on data drift.

Limited visibility into data lineage: While feature stores track feature versions, they often don't provide a complete picture of the data pipeline and the potential sources of drift. Understanding how data is collected, processed, and transformed is crucial for diagnosing and addressing drift.

Reactive, not proactive: Feature stores can help you roll back to previous feature versions if drift is detected, but they don't prevent drift from happening in the first place. A proactive approach to data drift management is essential for maintaining model performance.

➡️ What to Do Instead?

Treat feature stores as part of a larger solution: Don't rely on feature stores as your sole defense against data drift. Integrate them with robust data monitoring and validation tools that can track data characteristics, detect anomalies, and trigger alerts.

Implement data quality checks at every stage: Don't wait until data reaches the feature store to check for quality issues. Embed data validation steps throughout the data pipeline, from data ingestion to feature engineering.

Focus on data observability: Go beyond basic monitoring and focus on data observability. Gain deep insights into your data's behavior, track data lineage, and understand the root causes of drift.
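
As a rough sketch of what "data quality checks at every stage" can look like at the ingestion step, the example below validates each record against a small rule set before it moves further down the pipeline. The schema, field names, and helper functions (`validate_record`, `split_batch`) are hypothetical; in practice you might reach for a dedicated validation library instead.

```python
def validate_record(record, schema):
    """Return a list of human-readable problems found in one record."""
    problems = []
    for field, rules in schema.items():
        value = record.get(field)
        if value is None:
            problems.append(f"{field}: missing")
            continue
        if not isinstance(value, rules["type"]):
            problems.append(
                f"{field}: expected {rules['type'].__name__}, "
                f"got {type(value).__name__}"
            )
            continue
        if "min" in rules and value < rules["min"]:
            problems.append(f"{field}: {value} is below minimum {rules['min']}")
        if "max" in rules and value > rules["max"]:
            problems.append(f"{field}: {value} is above maximum {rules['max']}")
        if "allowed" in rules and value not in rules["allowed"]:
            problems.append(f"{field}: unexpected value {value!r}")
    return problems


def split_batch(records, schema):
    """Separate clean records from rejected ones (kept with their problems)."""
    valid, rejected = [], []
    for record in records:
        problems = validate_record(record, schema)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected


# Hypothetical schema for a transaction feed.
TRANSACTION_SCHEMA = {
    "amount": {"type": float, "min": 0.0},
    "currency": {"type": str, "allowed": {"USD", "EUR", "GBP"}},
    "customer_age": {"type": int, "min": 18, "max": 120},
}

batch = [
    {"amount": 25.0, "currency": "USD", "customer_age": 34},
    {"amount": -5.0, "currency": "USD", "customer_age": 41},
    {"amount": 12.5, "currency": "XYZ", "customer_age": 29},
    {"amount": 80.0, "currency": "EUR"},
]

valid, rejected = split_batch(batch, TRANSACTION_SCHEMA)
for record, problems in rejected:
    print(record, "->", problems)
```

Running the same checks at ingestion, after transformation, and before features are written to the store makes it much easier to pinpoint which stage introduced a problem.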

The Culture Gap: Bridging the Divide Between Data Science and Engineering 🌉

Even with the best tools and processes, your MLOps efforts will fall short if there's a disconnect between your data science and engineering teams. This "culture gap" is a common challenge in many organizations, and it can have a profound impact on your ability to manage data drift effectively.

Data scientists are often focused on model development and experimentation, while engineers are responsible for deploying and maintaining models in production. This division of labor can lead to a lack of communication, misaligned priorities, and a fragmented approach to MLOps. 💔

➡️ How the Culture Gap Fuels Data Drift:

"Throw it over the wall" mentality: Data scientists may build models in isolation, without considering the operational challenges of deploying and maintaining them in production. This can lead to models that are difficult to deploy, monitor, and update.

Lack of shared ownership: If data scientists don't feel responsible for the performance of their models in production, they may not be proactive about monitoring for data drift or collaborating with engineers to address it.

Siloed tools and processes: Data science and engineering teams may use different tools and processes, making it difficult to share data, collaborate on model development, and establish a unified approach to MLOps. 🗄️

➡️ Closing the Culture Gap:

Foster a culture of collaboration: Encourage data scientists and engineers to work together from the outset of a project. Establish shared goals, metrics, and communication channels.

Apply DevOps principles: Bring DevOps practices such as continuous integration, continuous delivery, and continuous monitoring into MLOps. Automate as much of the MLOps pipeline as possible to reduce manual handoffs and streamline the process.

Invest in MLOps platforms: Consider adopting an MLOps platform that provides a unified environment for data scientists and engineers to collaborate, build, deploy, and monitor models.

Promote data literacy across teams: Ensure that both data scientists and engineers have a solid understanding of data drift, its causes, and its impact on model performance. Educate them on best practices for monitoring and mitigating drift.

Looking Forward: Embracing Proactive Data Drift Management

Data drift is not a problem that can be solved once and then forgotten. It's an ongoing challenge that requires continuous monitoring, adaptation, and collaboration. The future of MLOps lies in embracing a proactive approach to data drift management, one that is deeply integrated into the entire model lifecycle.

➡️ Predictions and Considerations:

Automated drift detection and remediation: We can expect to see more sophisticated tools and techniques for automatically detecting and mitigating data drift. Machine learning models themselves may be used to identify subtle patterns of drift and trigger automated retraining or model updates.

Focus on data-centric AI: The AI community is increasingly recognizing the importance of data quality and data management. We can anticipate a shift towards data-centric AI, where the focus is on building robust, adaptable data pipelines that can handle the dynamic nature of real-world data.

Rise of the "MLOps Data Specialist": As data drift management becomes more critical, we may see the emergence of a new role within MLOps teams: the MLOps Data Specialist. This role will focus specifically on data quality, monitoring, and drift mitigation, bridging the gap between data science and engineering.

Increased regulation and scrutiny: As AI becomes more pervasive, we can expect increased regulation and scrutiny around data privacy, model fairness, and the ethical implications of AI. Organizations will need to demonstrate that their models are robust, reliable, and unbiased, even in the face of data drift.

Greater emphasis on explainability and interpretability: Understanding why a model is making a particular prediction is crucial for diagnosing and addressing data drift. We can expect to see more emphasis on explainable AI (XAI) techniques that provide insights into model behavior and help identify the root causes of performance degradation.
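
You don't have to wait for the future to try a simple version of automated remediation. The sketch below shows one possible policy, under stated assumptions: a drift score (for example a PSI value) is computed on a schedule, and retraining is requested only after several consecutive readings exceed a threshold, so a single noisy day doesn't kick off a retrain. The class name `RetrainTrigger`, the threshold, the patience value, and the scores are all illustrative.

```python
class RetrainTrigger:
    """Requests retraining after several consecutive drift breaches."""

    def __init__(self, threshold=0.25, patience=3):
        self.threshold = threshold
        self.patience = patience
        self.breaches = 0

    def update(self, drift_score):
        """Feed one periodic drift score; return True when retraining is due."""
        if drift_score > self.threshold:
            self.breaches += 1
        else:
            self.breaches = 0  # a healthy reading resets the streak
        if self.breaches >= self.patience:
            self.breaches = 0  # start counting afresh after triggering
            return True
        return False


# Hypothetical daily drift scores: one isolated spike, then sustained drift.
trigger = RetrainTrigger(threshold=0.25, patience=3)
daily_scores = [0.05, 0.30, 0.08, 0.27, 0.31, 0.40, 0.12]
decisions = [trigger.update(score) for score in daily_scores]

for day, (score, retrain) in enumerate(zip(daily_scores, decisions), start=1):
    print(f"day {day}: drift={score:.2f} retrain={retrain}")
```

With these inputs the isolated spike on day 2 is ignored and retraining is requested on day 6, after three breaches in a row. What happens next (a full retrain, a rollback, or a human review) is a design choice for your team.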